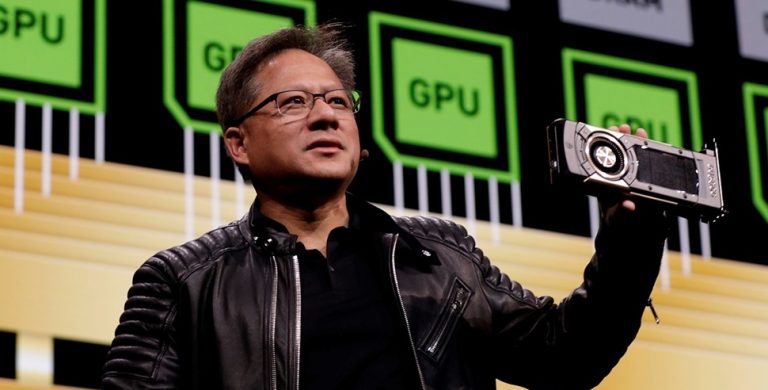

Nvidia Chief Executive Jensen Huang has moved decisively to quash suggestions of strain between the world’s most powerful AI chipmaker and OpenAI, pushing back against reports that Nvidia is reconsidering the scale of its financial and strategic commitment to the ChatGPT creator at a critical moment for the artificial-intelligence industry.

Huang’s remarks came after The Wall Street Journal reported that Nvidia was stepping back from a previously announced plan to invest as much as $100 billion in OpenAI and to help build 10 gigawatts of AI computing infrastructure. The report suggested Huang had privately emphasized that the agreement was nonbinding, raised concerns about OpenAI’s business model, and pointed to growing competition from rivals such as Anthropic and Google. It also said discussions had shifted toward a smaller equity investment, potentially still in the tens of billions of dollars, rather than the full headline figure.

Speaking to reporters in Taipei on Saturday, Huang dismissed the notion outright. He described the idea of friction with OpenAI as “nonsense” and stressed that Nvidia would “definitely participate” in OpenAI’s next funding round, according to Bloomberg. He framed the investment not as a defensive move, but as a conviction bet on what he called one of the most important companies of the modern era.

“We will invest a great deal of money,” Huang said. “I believe in OpenAI. The work that they do is incredible. They’re one of the most consequential companies of our time.”

Huang declined to specify how much Nvidia would ultimately commit, deferring instead to OpenAI CEO Sam Altman on the size and structure of the fundraising. That reticence underscores a central tension now facing the AI sector: capital needs are exploding, but even the largest technology firms are wary of locking themselves into rigid, long-term commitments as the competitive landscape shifts rapidly.

OpenAI, meanwhile, has sought to project stability. A spokesperson told the Journal that Nvidia and OpenAI are “actively working through the details of our partnership,” adding that Nvidia “has underpinned our breakthroughs from the start, powers our systems today, and will remain central as we scale what comes next.” The statement underscores that, whatever the precise equity arrangements, OpenAI remains deeply dependent on Nvidia’s hardware to train and run its most advanced models.

The backdrop to the dispute is OpenAI’s extraordinary funding ambitions. In December, The Wall Street Journal reported that the company was seeking to raise up to $100 billion, a sum that would eclipse any previous private funding round in Silicon Valley. The New York Times reported this week that Nvidia, Microsoft, Amazon, and SoftBank are all in discussions about participating, highlighting how OpenAI has become a focal point for capital across the tech and finance worlds.

Each of those relationships carries its own strategic complexity. Microsoft remains OpenAI’s largest shareholder, holding roughly 27 percent following a restructuring last year, and continues to supply much of its cloud infrastructure. Amazon has signed a $38 billion, seven-year deal to provide AWS capacity and is reportedly weighing a further multibillion-dollar equity investment. SoftBank founder Masayoshi Son is said to be close to committing as much as $30 billion, extending his long-standing bet on transformative technologies.

However, the calculus is different for Nvidia. Unlike cloud providers that are both investors and customers, Nvidia’s dominance rests on selling chips and systems to everyone building large-scale AI. That position gives it immense leverage but also exposes it to accusations of favoritism if it appears too closely aligned with a single model developer. The Journal report’s suggestion that Huang has been vocal about competition from Anthropic and Google fits with Nvidia’s public stance that the AI ecosystem will remain plural, not winner-take-all.

Analysts say this helps explain why Nvidia has emphasized flexibility. Even if the original $100 billion figure was aspirational rather than contractual, the scale of OpenAI’s infrastructure plans is real. Training frontier models now requires tens of billions of dollars in compute, power, and data-center build-out, costs that are rising as models grow more complex and inference demand surges globally.

Huang’s comments in Taipei also come as Nvidia faces intensifying scrutiny from governments and regulators over its central role in AI supply chains. U.S. export controls, particularly on advanced chips destined for China, have already reshaped Nvidia’s product strategy. Any perception that Nvidia is wavering on its biggest AI customer could ripple through markets that view OpenAI as a bellwether for the sector’s growth trajectory.

Instead, Huang chose to send a message of confidence and continuity. By publicly backing OpenAI while declining to lock in a number, he signaled both belief in the company’s mission and caution about the form that support will take. That balancing act reflects the broader state of the AI boom: enormous opportunity, unprecedented capital requirements, and strategic relationships that remain fluid rather than fixed.