The global AI industry was thrown into turmoil in January 2025 when DeepSeek, a Chinese artificial intelligence company backed by hedge fund High-Flyer, released R1, a powerful open-source reasoning model.

What made R1 revolutionary was not just its capabilities, but the fact that it had been developed using significantly weaker chips and a fraction of the funding used by Western AI firms like OpenAI, Anthropic, and Google DeepMind.

DeepSeek’s unexpected success sent ripples across the tech industry, raising questions about whether the AI boom—fueled by the belief that bigger, more expensive computing power leads to smarter AI—was about to be disrupted by a more cost-effective approach.

Global investors reacted swiftly and dumped shares of AI chipmakers, particularly Nvidia, whose market dominance has been built on the assumption that more powerful hardware is essential for AI progress.

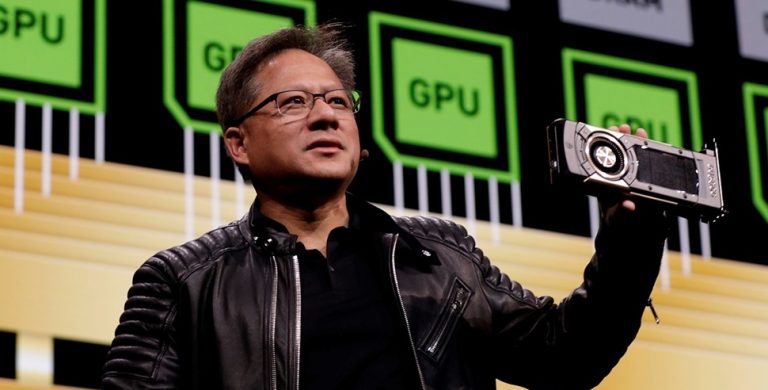

The result? Nvidia’s market capitalization plunged by roughly $600 billion in a single day, the largest one-day loss in its history, and CEO Jensen Huang’s personal fortune took a nearly 20% hit.

But according to Huang, this was a massive overreaction based on a flawed understanding of how AI models work.

Huang: “Investors Took Away the Wrong Message”

During a pre-recorded interview aired as part of an event by Nvidia partner DDN to introduce its new Infinia software platform, Huang pushed back against investor fears, arguing that the market misinterpreted what DeepSeek’s achievement actually meant for AI’s future.

“From an investor perspective, there was a mental model that the world was pre-training and then inference. And inference was: you ask an AI a question, and you instantly got an answer,” Huang said. “I don’t know whose fault it is, but obviously that paradigm is wrong.”

In other words, investors wrongly assumed that AI’s reliance on high-powered computing had been fundamentally disrupted—but according to Huang, that couldn’t be further from the truth.

He explained that while DeepSeek’s R1 demonstrated that pre-training can be done with fewer resources, it does not mean AI models can function at scale without advanced computing infrastructure.

Instead, the real challenge in AI development today lies in post-training methods, which allow AI systems to refine their intelligence, improve their reasoning, and make more accurate predictions after their initial training phase.

“Pre-training is still important, but post-training is the most important part of intelligence. This is where you learn to solve problems,” Huang emphasized.

Post-training techniques—such as reinforcement learning, fine-tuning, retrieval-augmented generation (RAG), and self-improvement algorithms—are what truly make AI models more reliable, intelligent, and efficient over time.

And in Huang’s view, post-training is itself highly compute-intensive, meaning that the demand for high-performance AI chips, like those produced by Nvidia, will only continue to grow.

“Post-training methods are really quite intense,” Huang noted. “The demand for computing power will continue to grow as AI models improve their reasoning abilities.”

Why DeepSeek’s Breakthrough Isn’t a Threat to Nvidia

Rather than seeing DeepSeek R1 as a threat to Nvidia’s dominance, Huang insisted that its success was actually a positive development for the AI industry.

“It is so incredibly exciting. The energy around the world as a result of R1 becoming open-sourced — incredible,” he said.

His stance echoed that of Lisa Su, CEO of Nvidia’s biggest rival AMD, who recently addressed similar concerns by stating: “DeepSeek is driving innovation that’s good for AI adoption.”

Huang’s comments come amid an ongoing debate in the AI world about whether AI scaling—the process of improving models by using more data and computing power—is slowing down.

In late 2024, reports emerged that OpenAI’s latest advancements were hitting a plateau, leading to speculation that the AI boom might not deliver on its promise. That, in turn, raised fears that Nvidia’s AI-fueled revenue explosion could lose momentum.

But Huang has consistently pushed back against this narrative, arguing that the focus of AI scaling has simply shifted from training to inference and post-training.

“Scaling is alive and well; it has just moved beyond training,” Huang said in a previous speech.

He indicated that by focusing on post-training intelligence, improved reasoning, and self-learning models, the AI industry will continue to require powerful chips, ensuring that Nvidia’s hardware remains essential to AI progress.

What This Means for Nvidia’s Upcoming Earnings Report

Huang’s remarks come just days ahead of Nvidia’s first earnings call of 2025, scheduled for February 26.

DeepSeek’s impact has already been a major discussion point on earnings calls across the tech sector, with companies from Airbnb to Palantir addressing its implications.

With Nvidia’s dominance in AI hardware facing renewed scrutiny, Huang’s comments suggest that the company will double down on post-training as a core pillar of its long-term AI strategy.

The market has already begun to correct itself, with Nvidia’s stock recovering much of its lost value.

However, the biggest test will come on February 26, when Nvidia’s earnings report will reveal whether investor fears were truly unfounded—or if the AI industry’s computing revolution is entering a new phase that Nvidia must adapt to.