Editor’s Note: This is an exclusive article for Tekedia that we hope will help our readers understand the processes that take place as ideas evolve into investments. Mr. Aoaeh wrote this piece while an MBA student at New York University’s Stern School of Business. Though nanotechnology may not be applicable in your local market in Africa, the steps in this article are universal.

Introduction

I became exposed to nanotechnology during my days as an undergraduate student at Connecticut College, in New London, Connecticut. I pursued a double major in Physics and Mathematics, and had the good fortune of working as a research laboratory assistant in the Tunable Semiconductor Diode-Laser Spectroscopy lab, which is run by Professor Arlan W. Mantz, Oakes Ames Professor of Physics, and erstwhile chair of the Physics Department. My involvement with the lab spanned three years, and that experience played a critical role in my education.

Executive Summary: Investors ought to become aware of the opportunities and risks presented by the budding field of nanotechnology.

What is it?

The term nanotechnology refers to a group of scientific processes that enable products to be manufactured by the manipulation of matter at the molecular level – at the nanoscale. One nanometer represents a length of 10⁻⁹ meters – one billionth of a meter[i]. Nanotechnology enables the manipulation of matter at or below dimensions of 100 nanometers. Nanotechnology draws from a multitude of scientific disciplines – physics, chemistry, materials science, computer science, biology, electrical engineering, environmental science, radiology and other areas of applied science and technology.

There are two major approaches to manufacturing at the nanoscale:

- In the “bottom-up” approach, nanoscale materials and devices assemble themselves from molecular components through molecular recognition – small devices are assembled from small components.

- In the “top-down” approach, materials and devices are developed without the manipulation of individual molecules – small devices are assembled from larger components.

Where is Activity Concentrated?

Research into nanotechnology and its applications is growing rapidly around the world, and many emerging market economies are sparing no effort in developing their own research capacity in nanotechnology.

- Naturally, the U.S., Japan, Western Europe, Australia and Canada hold an advantage in the short term.

- China and India have made significant progress in establishing a foundation on which to build further capability in nanotechnology – a 2004 listing puts them among the top 10 nations worldwide for peer-reviewed articles in nanotechnology[ii].

- South Africa, Chile, Mexico, Argentina, The Philippines, Thailand, Taiwan, The Czech Republic, Costa Rica, Romania, Russia and Saudi Arabia have each committed relatively significant resources to developing self-sufficient local nanotechnology industries.

Why should investors care?

Fundamentally, investors should pay attention to nanotechnology because of its high potential to spawn numerous “disruptive technologies.” Nanoscale materials and devices promise to be:

- Cheaper to produce,

- Higher performing,

- Longer lasting, and

- More convenient to use in a broad array of applications.

This means that processes that fail to provide results comparable to those available through nanotechnology will become obsolete rather quickly, once an alternative nanoscale process has been perfected. In addition, companies that fail to embrace and apply nanotechnology could face rapid decline if their competitors adopt the technology successfully.

The United States Government has maintained its commitment to fostering U.S. leadership and dominance in the emerging fields of nanoscale science. In its 2006 budget, the National Nanotechnology Initiative, a multi-agency U.S. Government program, requested $1.05 Billion for nanotechnology R&D across the Federal Government[iii]. That amount reflects an increase from the $464 Million spent on nanotechnology by the Federal Government in 2001.

Applications of Nanotechnology

Nanotechnology’s promise to revolutionize the world we live in spans almost every aspect of human endeavor. Today, nanotechnology is applied in as many as 200 consumer products.[iv]

- Carbon nanotubes can be used to fabricate stronger, lighter materials for use in automobile bodies, for example.

- Researchers at Stanford University have killed cancer cells using heated nanotubes, while EndoBionics, a US firm, has developed the MicroSyringe for injecting drugs into the heart.[v] Other applications in medicine and biotechnology are in the pipeline.

- MagForce Technologies, a Berlin-based company, has developed iron-oxide particles that it coats with a compound that is a nutrient for tumor cells. Once the tumor cells ingest these particles, an external magnetic field causes the iron-oxide particles to vibrate rapidly. The vibrations kill the tumor cells, which the body then eliminates naturally.[vi]

- Cosmetics companies are actively exploring nanotechnology as a source of enhanced products, for example cosmetics that are absorbed more easily through human skin and that last longer.

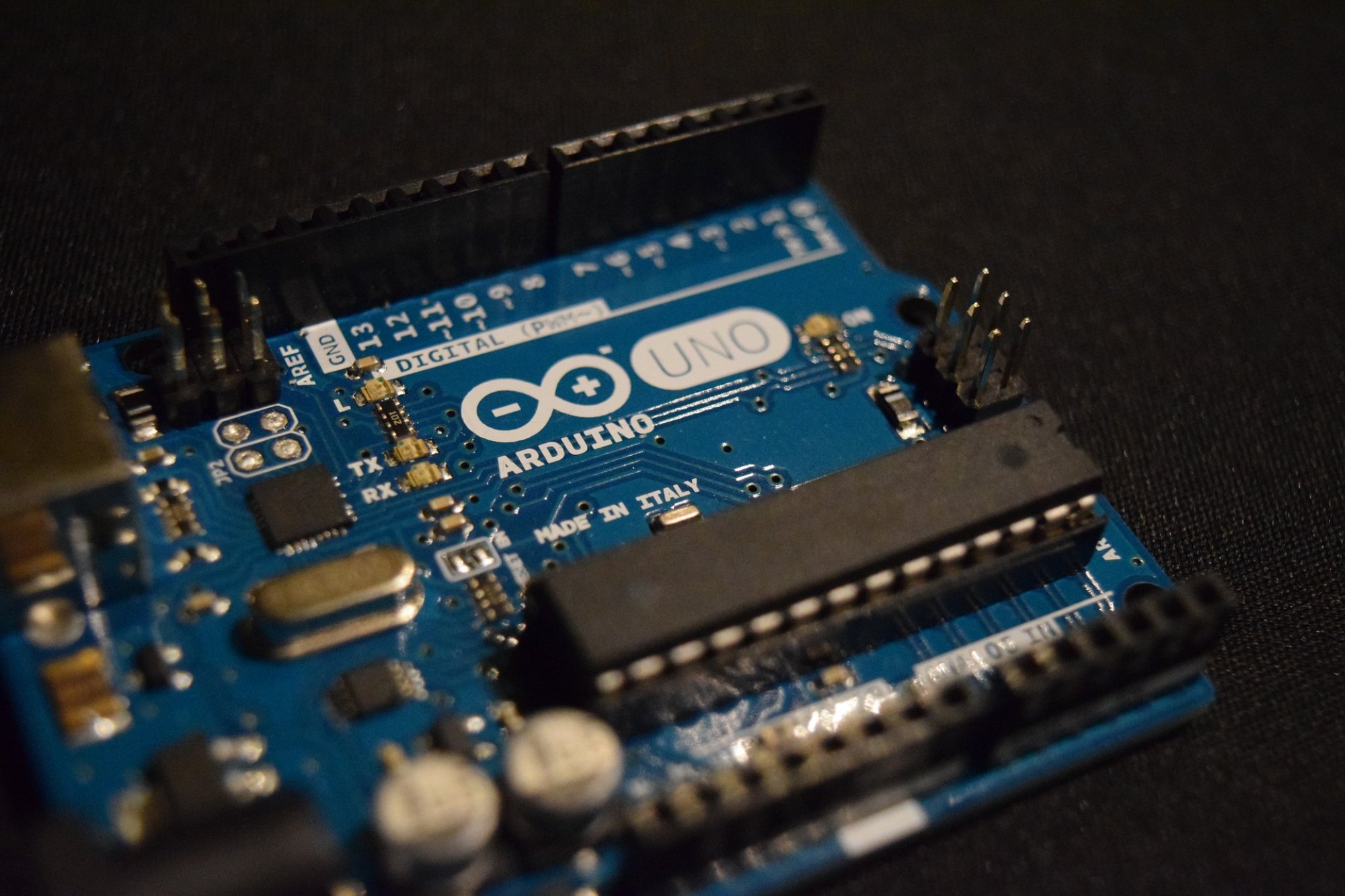

- Advanced Micro Devices and Intel both use nanotechnology in the design and production of their most recent line of computer chips. Hewlett Packard is also actively involved in research to vastly enhance storage capacity on its computers and other electronic devices through nanoscale applications.

- Nanotechnology has been applied in the garment industry, for example to produce stain-resistant fabrics.

- Nanotechnology companies in the developing world are pursuing solutions to problems peculiar to the developing world – for example, an Indian company is working on a prototype kit for diagnosing tuberculosis. There is great potential for the application of nanotechnology to agriculture.

- Nanostructures are used in the production of Organic Light Emitting Diode (OLED) screens. OLED displays are replacing Liquid Crystal Displays in consumer products such as mobile phones, digital media products, and computer monitors.

Threats

In spite of its promise, nanotechnology faces threats that could impede its advance. Among these[vii],[viii]:

- It is not yet clear how nanotechnology will affect the health of workers in industries in which it is applied. For example, how should we assess exposure to nanomaterials? How should we measure the toxicity of nanomaterials?

- Public agencies and private organizations do not have a clear sense of how further progress in nanotechnology will affect the environment, or of the public safety issues that will accompany an expanded use of nanotechnology in industrial, medical and consumer applications. For example, what factors should risk-focused research be based on, and how should we go about creating prediction models to gauge the potential impact of nanomaterials?

- The complexity of the science that is integral to nanotechnology makes it a very difficult area to regulate. It is likely that firms involved in the pursuit of nanoscale applications in medicine and pharmaceutics will face long delays in obtaining regulatory approval for the wide-scale use of their products.

- The complexity of nanotech-related patents has led to a backlog at the U.S. Patent Office. It now takes 4 years, on average, to process a patent application – about double the waiting time in 2004. This could lead to situations in which a firm’s intellectual property becomes public before it comes under patent protection, thus eroding any competitive advantages that it had over its rivals.[ix]

- It is not yet clear how society can protect itself from the abuse of nanotechnology. The public sector needs to collaborate with the private sector in developing protective mechanisms to guard against “accidents and abuses” of the capabilities of nanoscale processes and materials.

A note to would-be investors

The average investor must remain keenly aware that firms involved in nanotechnology will have to assign significant resources to research and development. There is no reliable means of predicting the ultimate outcome of such activities, and the probability that any firm can maintain an enduring edge over its competitors is small. Investors should expect the mantle of leadership in innovation to change with a relatively high frequency. As such, pure-play nanotechnology firms will need to pay critical attention to means of sustaining market dominance that go beyond core competence in the science of nanotechnology.

Lux Research estimates that revenues from products using nanotechnology will increase from $13 Billion in 2004 to $2.6 Trillion in 2014. The 2014 estimate represents approximately 15% of global manufacturing output.[x]
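To put that estimate in perspective, the growth rate it implies can be worked out directly. The short Python sketch below is only an illustration: it assumes smooth compounding over the ten years from 2004 to 2014, and the function and variable names are hypothetical rather than anything Lux Research publishes. It also backs out the size of global manufacturing output implied by the 15% share.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted from the Lux Research estimate, in US dollars.
revenue_2004 = 13e9
revenue_2014 = 2.6e12
years = 2014 - 2004

# Assumption: growth compounds evenly each year, which real markets rarely do.
growth = compound_annual_growth_rate(revenue_2004, revenue_2014, years)

# If $2.6 trillion is about 15% of global manufacturing output,
# the implied total is the revenue divided by that share.
implied_global_output = revenue_2014 / 0.15

print(f"Implied growth rate: {growth:.1%} per year")
print(f"Implied global manufacturing output: ${implied_global_output / 1e12:.1f} trillion")
```

On these inputs the estimate implies growth of roughly 70% a year for a decade, and a global manufacturing base of about $17 trillion in 2014. Investors can use figures like these to judge how aggressive the forecast is.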

In 2005, Lux Research and PowerShares Capital Management launched a nanotech ETF – The PowerShares Lux Nanotech Portfolio (PXN). In addition, Lux Research measures the performance of publicly traded companies in the area of nanotechnology through the Lux Nanotech Index™, a modified equal dollar weighted index of 26 companies. The companies in this index earn profits by utilizing nanotechnology at various stages of a nanotechnology value chain[xi]:

- Nanotools – Hardware and Software used to manipulate matter at the nanoscale.

- Nanomaterials – Nanoscale structures in an unprocessed state.

- Nanointermediates – Intermediate products that exhibit the features of matter at the nanoscale.

- Nano-enabled Products – Finished goods that incorporate nanotechnology.

Companies in the index are further classified as Nanotech Specialists or End-Use Incumbents.

Investors must note that the investment characteristics of Nanotech Specialists are likely to differ markedly from those of End-Use Incumbents. The end-use incumbents that are part of this index include 3M, GE, Toyota, IBM, Intel, Hewlett-Packard, BASF, Du Pont, and Air Products & Chemicals. Because these companies have large, well-established and significant operations in arenas that do not rely heavily on nanotechnology, investors can expect them to achieve financial results that are only moderately volatile. In contrast, the financial performance of nanotech specialists will exhibit highly volatile swings, because:

- With the exception of companies in the “picks and shovels” segment of nanotechnology, much of the work that many nanotech specialists engage in is still in the “trial and error” phase, and

- There is no reliable means of predicting the results that heavy investment in R&D will yield.

Finally, it is likely that financial valuations of nanotech firms will fail to capture the true value of the intangible assets that provide the bedrock of each company’s ability to sustain innovation, create economic value, and protect its competitive advantage. If nanotechnology is truly the way of the future, then investors must embrace that future with enthusiasm that is layered with caution by:

- Performing an extra amount of due diligence before committing significant funds to investments in individual nanotechnology companies,

- Limiting such investments to companies in the U.S., Japan, Canada, Western Europe, and Australia, in the near term, and

- Following developments in the nanotechnology initiatives of the BRIC bloc of emerging market economies without committing any funds until a clear assessment of the future prospects of individual investment opportunities becomes possible.

Individual investors must exercise an extra amount of caution in pursuing nanotech investments, and should not commit more than they can afford to lose. Most individual investors with a desire to invest in nanotechnology should do so through PXN and similar instruments. Institutional investors must bring all their resources to bear in assessing the viability of a nanotech investment strategy prior to committing funds to this nascent area. For added security, individual investors who seek to invest in publicly traded nanotech companies should seek firms with the following characteristics:

- No debt, and positive cash flows, and evidence of an ability to sustain profits.

- Companies that supply corporate customers must not be too reliant on one customer.

- Founders and insiders should have a significant and increasing portion of their net worth at stake in the company, and a track record in multi-disciplinary research.

A Cautionary Tale

Nanosys called off plans to sell 6.25 Million shares to the public on August 4, 2004. Merrill Lynch led a team of investment banks in the I.P.O. effort, which would have given the public a 29 percent stake in the company for shares priced between $15 and $17 each. Nanosys had hoped to raise $115 Million.
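The figures in that offering can be checked with simple arithmetic. The Python sketch below is a rough illustration only. It assumes that the 6.25 Million shares account for the whole 29 percent public stake, and the variable names are made up for the example. It computes the gross proceeds at each end of the price range and the company-wide value that such a stake would imply.

```python
# Terms of the cancelled offering, as quoted above.
shares_offered = 6.25e6
price_low, price_high = 15.0, 17.0
public_stake = 0.29  # fraction of the company the public would have owned

# Gross proceeds before fees at the bottom and top of the price range.
proceeds_low = shares_offered * price_low
proceeds_high = shares_offered * price_high

# Assumption: the offered shares make up the entire 29% stake, so the
# implied value of the whole company is proceeds divided by that fraction.
implied_value_low = proceeds_low / public_stake
implied_value_high = proceeds_high / public_stake

print(f"Gross proceeds: ${proceeds_low / 1e6:.2f}M to ${proceeds_high / 1e6:.2f}M")
print(f"Implied company value: ${implied_value_low / 1e6:.0f}M to ${implied_value_high / 1e6:.0f}M")
```

On these assumptions the offering would have grossed between $93.75 Million and $106.25 Million, which is below the $115 Million target quoted above; the source does not explain the difference. The implied value of the whole company comes to roughly $323 Million to $366 Million.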

Investors may draw the following lessons from that occurrence:

- Many nanotech companies face an uphill task in converting promising research into products that can sustain a steady revenue stream.

- A considerable number of nanotech companies may be surrounded by “more hype than substance”.

- There is no guarantee that the price investors pay for an investment in nanotech will be adequate, once all associated risks are taken into account. For example, stock price volatility of nanotech companies may exhibit greater systematic tendencies than normal. Other publicly traded nanotech companies saw declines in their stock price when Nanosys cancelled its I.P.O. in 2004: Nanogen fell 99 cents to $3.81, Nanophase Technologies fell 31 cents to $5.69, and Harris & Harris, a publicly traded investment group with a nanotech focus, fell 85 cents to $8.53. Harris & Harris owned 1.58 percent of Nanosys[xii].
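The price moves quoted in the last lesson are easier to compare as percentages than as cents. The Python sketch below is a simple illustration. It assumes that “fell X to Y” means Y is the price after the fall, so the earlier price is Y plus X, and the names used are hypothetical.

```python
# (drop in dollars, price after the drop) for each company quoted above.
price_moves = {
    "Nanogen": (0.99, 3.81),
    "Nanophase Technologies": (0.31, 5.69),
    "Harris & Harris": (0.85, 8.53),
}

declines = {}
for company, (drop, price_after) in price_moves.items():
    # Assumption: the price before the fall is the later price plus the drop.
    price_before = price_after + drop
    declines[company] = drop / price_before

# Print the largest decline first.
for company, decline in sorted(declines.items(), key=lambda item: -item[1]):
    print(f"{company}: {decline:.1%} decline")
```

Read this way, Nanogen lost about a fifth of its value, against roughly 9% for Harris & Harris and 5% for Nanophase Technologies. News about one nanotech firm moved the others by very different amounts.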

A Note on Patents[xiii]

While it is true that there are serious delays in the processing of patents filed with the U.S. Patent Office, each patent that is filed is published automatically 18 months after the patent office receives the filing. This notifies the public that the process or product that is the subject of that filing has some probability of gaining patent protection. In legal parlance the owner of the patent application is said to have acquired “provisional rights”, and the term “patent pending” may be applied to the product or process. Companies and individuals may not launch lawsuits based on provisional rights. However, once the patent is approved, the patent’s owner may sue for infringement of the patent going back to the date on which the patent came under “provisional rights” status. In essence, once an individual or company obtains provisional rights on a patent filing, they may begin to issue “cease-and-desist” warnings to competitors that may be attempting to appropriate the inventor’s intellectual property.

Uncertainty is the real drawback of the delays at the U.S. Patent Office. While companies and individuals get provisional rights after 18 months, there is no guarantee that any given patent application will win eventual approval, or even that it will be reviewed within 4 years. Therefore, companies cannot rely on obtaining intellectual property rights to the invention. This may force them to hedge their bets in some other fashion.

Many risks accompany investments in nanotechnology. However, if the promise of nanotech is to be believed, it may yield significant returns to those investors who learn to harness its power.

December 22, 2006

[i] For perspective, 100 nm represents about 1/1000 of the width of a human hair.

[ii] Hassan, Mohamed H. A., Small Things and Big Changes in The Developing World. Science, Vol. 309 no. 5731, July 1, 2005, accessed on December 19, 2006 at http://www.sciencemag.org/cgi/content/full/309/5731/65

[iii] The National Nanotechnology Initiative, Research and Development Leading To A Revolution in Technology and Industry, Supplement to The President’s FY 2006 Budget

[iv] http://www.nanotechproject.org/index.php

[v] Bradbury, Danny, A Mini Revolution. The Independent (London) May 24, 2006.

[vi] Feder, Barnaby J., Doctors Use Nanotechnology To Improve Health Care. The New York Times, November 1, 2004, accessed on December 22, 2006.

[vii] Scientists Question Safety Of Nanotechnology, www.macnewsworld.com, November 20, 2006, accessed on December 22, 2006.

[viii] Markoff, John, Technologists Get A Warning And A Plea From One of Their Own, New York Times, March 13, 2000.

[ix] Van, Jon, Nanotechnology Hits A Patent Roadblock, Chicago Tribune, November 27, 2006.

[x] Gosh, Palash R., How To Invest In Nanotech, www.businessweek.com, April 17, 2006, accessed on December 22, 2006.

[xi] Adapted from www.luxresearchinc.com.

[xii] Feder, Barnaby J., Nanosys Calls Off Initial Public Offering, New York Times, August 5, 2004.

[xiii] Wesley T. McMichael provided the explanation that forms the basis for this section. Wes and I were classmates at Connecticut College. He majored in Physics, with a minor in Computer Science. He now practices patent law.
