Surging demand for electricity from GPU-heavy data centers is outpacing grid capacity, leaving racks of high-performance chips idle, or “going dark,” and forcing operators to build their own power infrastructure.

This isn’t speculation. It’s already happening, driven by the explosive growth of AI training and inference workloads. Projections as of November 2025 indicate that U.S. data centers could face a 36-45 GW shortfall by 2028, equivalent to powering 25-33 million homes, with AI accounting for over 70% of new demand.
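As a quick sanity check on the homes comparison, the sketch below works out the average draw per home that the quoted figures imply. The function name is hypothetical and the only inputs are the numbers cited above; it is illustrative arithmetic, not a model of residential demand.

```python
def implied_kw_per_home(shortfall_gw: float, homes_millions: float) -> float:
    """Average kW per home implied by equating a GW shortfall to a home count."""
    kw_total = shortfall_gw * 1_000_000      # 1 GW = 1,000,000 kW
    homes = homes_millions * 1_000_000       # homes are quoted in millions
    return kw_total / homes


# Figures quoted in the text: 36-45 GW and 25-33 million homes.
low_end = implied_kw_per_home(36, 25)
high_end = implied_kw_per_home(45, 33)
print(f"Implied average draw: {high_end:.2f}-{low_end:.2f} kW per home")
```

Both ends of the range work out to roughly 1.4 kW of average draw per home, so the two quoted ranges are at least consistent with each other.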

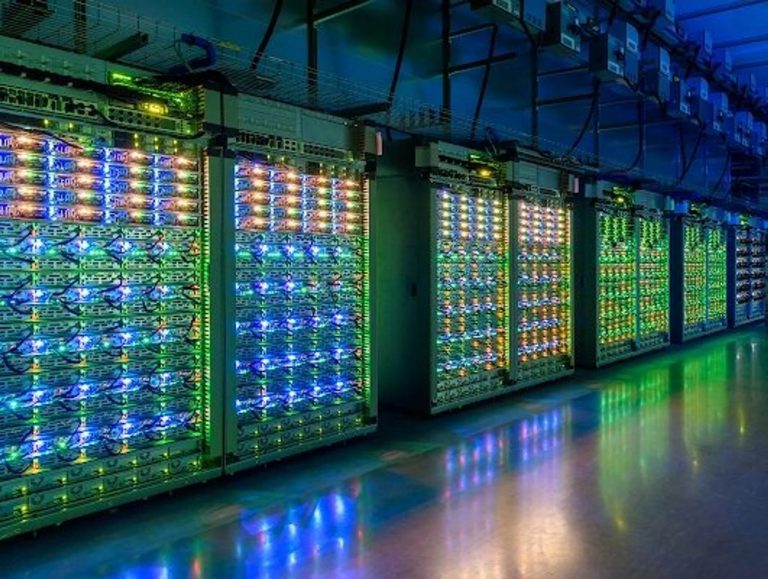

AI data centers rely on thousands of GPUs (e.g., Nvidia H100s or Blackwell chips) running in parallel, each consuming 700-1,000 watts—far more than traditional servers. A single large AI training cluster can draw 30-200 MW, comparable to a small city’s needs.
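To make the scale concrete, here is a rough back-of-the-envelope sketch relating GPU count to cluster power. The per-GPU wattages and the 30-200 MW range come from the paragraph above; the `overhead_factor` parameter (cooling, networking, power conversion) and the 100,000-GPU cluster size are assumptions added purely for illustration, not figures from the article.

```python
def cluster_power_mw(gpu_count: int, watts_per_gpu: float,
                     overhead_factor: float = 1.0) -> float:
    """Total draw in MW for a cluster; overhead_factor is a hypothetical multiplier."""
    return gpu_count * watts_per_gpu * overhead_factor / 1_000_000


def gpus_for_budget(budget_mw: float, watts_per_gpu: float,
                    overhead_factor: float = 1.0) -> int:
    """How many GPUs fit within a power budget, rounded down."""
    return int(budget_mw * 1_000_000 // (watts_per_gpu * overhead_factor))


# A hypothetical 100,000-GPU cluster at 700 W per chip, GPUs only.
gpu_only_mw = cluster_power_mw(100_000, 700)

# The same cluster with an assumed 1.5x facility overhead.
with_overhead_mw = cluster_power_mw(100_000, 700, overhead_factor=1.5)

# GPU counts implied by the 30 MW and 200 MW ends of the quoted range.
small_cluster = gpus_for_budget(30, 1_000)
large_cluster = gpus_for_budget(200, 700)

print(gpu_only_mw, with_overhead_mw, small_cluster, large_cluster)
```

Under these assumptions, the quoted 30-200 MW range corresponds to clusters on the order of tens to hundreds of thousands of GPUs, before counting any facility overhead.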

But the U.S. grid, aging and fragmented, can’t keep up. Over 12,000 projects representing 1,570 GW of generation capacity are queued for grid hookup, with waits stretching to 2030 due to permitting delays, equipment shortages (e.g., transformers with 2-year lead times), and transmission constraints.

In PJM Interconnection, which serves 13 states, data centers alone will drive 30 GW of new demand by 2030, but the grid lost 5.6 GW of capacity in the last decade from premature plant closures. In Silicon Valley, facilities like Digital Realty’s SJC37 (designed for 48 MW) and CoreSite’s SV7 (up to 60 MW) sit partially empty because local utilities can’t deliver power.

Globally, moratoriums in places like Amsterdam and Singapore halt new builds outright due to grid limits. Vacancy rates for data centers have hit a record low of 2.3%, but power shortages inflate costs and delay ROI.

Morgan Stanley warns of a “critical bottleneck,” with AI infrastructure investments, such as the $800 billion from Alphabet, Amazon, Meta, Microsoft, and OpenAI in 2025, at risk of stalling without energy fixes. This mismatch means GPUs, costing millions per cluster, remain powered off, wasting capital and slowing AI progress.

As one energy consultant put it, companies are now advised to “grab yourself a couple of turbines” to bypass the grid.

From Desperation to Strategy

Faced with 5-10 year waits for grid upgrades, tech giants are adopting “behind-the-meter” off-grid solutions, generating power on-site or co-locating with dedicated plants.

This “Bring Your Own Power” (BYOP) trend is reshaping energy markets, with projections of 35 GW self-generated by data centers by 2030. Examples include:

- A $500B West Texas supercluster that bypasses the grid for rapid deployment. Up to 10 GW, matching New York City’s peak summer demand; construction is underway, with operations targeted for 2026.
- Memphis facilities, built quickly to fuel Grok training. Multi-GW scale; operational since mid-2025, avoiding local grid strain.
- Generation deployed at 12+ U.S. sites for backup and primary power. 10-50 MW per site; reduces grid reliance by 20-30%.
- Partnerships with existing or reactivated plants (e.g., the Three Mile Island restart). 1-2 GW dedicated; aims for carbon-neutral AI by 2030.
- New builds commissioned to power Virginia hubs. 1 GW+; criticized for emissions but prioritized for speed.
- On-site generation for hyperscalers, with demand up 10x since 2024. Scalable to 100 MW+ per facility; 60% efficiency vs. grid baselines.

These moves are pragmatic but controversial: gas and diesel generators raise emissions and could delay coal retirements, while nuclear promises cleaner baseload power but faces regulatory hurdles.

China, investing twice as much in grid and power infrastructure according to the IEA, avoids this chaos through centralized planning, highlighting U.S. lags in permits and supply chains.

Broader Implications and Outlook

This shift could accelerate AI dominance for agile builders but risks a “power bubble”: trillions in data center capex without matching energy investment.

Utilities may raise household rates by 10-20% by 2030, and regions like Northern Virginia face blackouts. Positives include innovation: small modular reactors (SMRs) could add 190 TWh for data centers, and efficiency gains (e.g., Nvidia’s co-packaged optics) might cut GPU power use by 20-30%.

In short, the shift is real and unfolding now. The grid is “not ready” for AI’s hunger, so data centers aren’t waiting: they’re becoming mini-utilities. If trends hold, expect more “energy Wild West” plays, from turbine farms to fusion pilots, to keep those GPUs lit.