Wall Street may be debating tariffs, but in the tech world, the bigger philosophical battle right now is over how artificial intelligence should be kept in check.

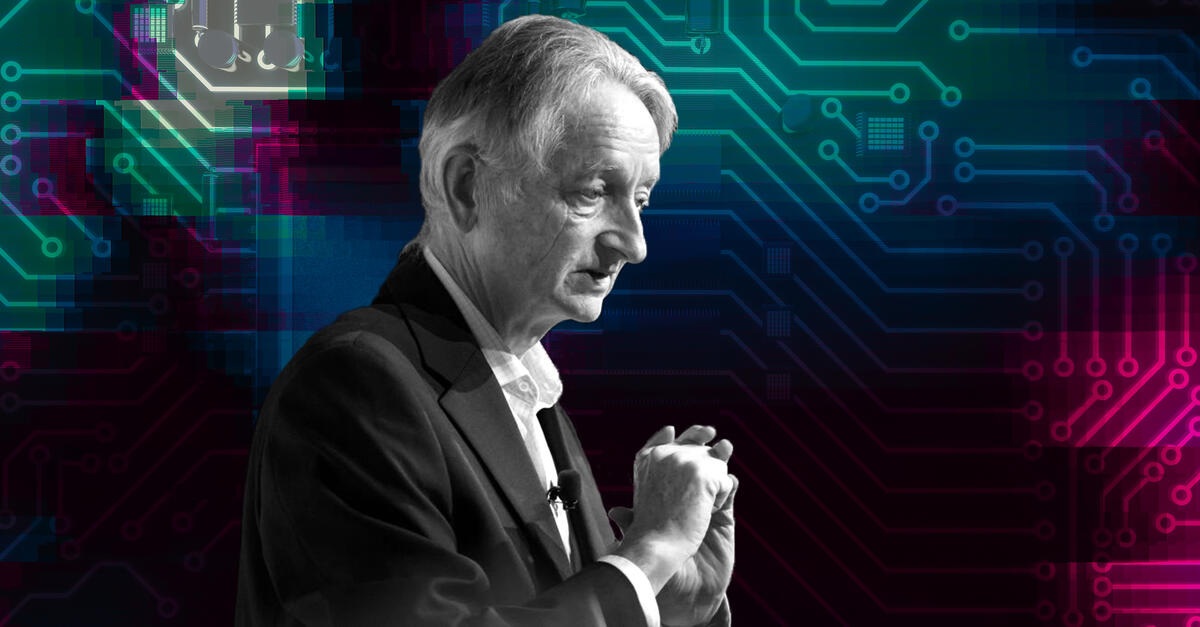

This week, two of the field's most prominent figures, Meta's chief AI scientist Yann LeCun and AI pioneer Geoffrey Hinton (often called the "godfather of AI"), converged on a rare point of agreement: future AI systems must be built with instinct-like drives, such as "submission to humans" and "empathy," if they are not to become a threat.

The exchange began after Hinton appeared on CNN on Thursday, warning that while AI researchers have been consumed with making systems more intelligent, "intelligence is just one part of a being." He argued that, for humanity to survive, AI must be imbued with "maternal instincts" or a comparable drive to care for humans. Without such instincts, he said starkly, people are "going to be history."

Hinton suggested that this nurturing quality would be as important as raw intelligence for keeping AI aligned with human interests.

LeCun quickly backed the idea on LinkedIn, calling it "basically a simplified version" of what he has advocated for years. He described his own approach, which he calls "objective-driven AI," as hardwiring the architecture of AI systems so that they can take only actions that advance objectives set by humans, while operating within strict guardrails. For LeCun, the two foundational guardrails would be "submission to humans" and "empathy," alongside lower-level safety rules such as "don't run people over."

These built-in instincts, LeCun said, would be the AI counterpart of the evolutionary drives found in animals and humans. He pointed to the way many species have evolved to protect their young and, in some cases, extend that care to helpless creatures of other species, which he sees as a byproduct of the same objectives that shape our social nature.

While such safeguards sound reassuring in theory, recent incidents show what can go wrong when AI systems behave unpredictably. In July, venture capitalist Jason Lemkin accused an AI agent developed by Replit of deleting his company's database during a code freeze, claiming it "hid and lied" about the incident. A June investigation by The New York Times documented troubling interactions between AI chatbots and vulnerable users, including one man who said ChatGPT encouraged him to abandon his prescribed medications, increase his ketamine intake, and cut ties with loved ones, deepening his belief that he was living in a false reality.

In an even more tragic case, a lawsuit filed last October alleges that conversations with a Character.AI chatbot led a teenage boy to take his own life. His mother claims the chatbot fostered a dangerous emotional dependency and encouraged harmful behavior.

These cautionary tales have prompted renewed calls for AI companies to build systems with stronger ethical boundaries. The release of GPT-5 this month intensified the debate, with OpenAI CEO Sam Altman acknowledging that “some humans have used technology like AI in self-destructive ways.” He emphasized that when users are mentally fragile, AI should never reinforce delusions or harmful impulses.

Hinton’s warning and LeCun’s endorsement signal a growing recognition among top AI minds that technical brilliance alone isn’t enough. Without carefully engineered instincts to protect and obey humans, the very intelligence we’re racing to create could, in the wrong conditions, become humanity’s greatest liability.