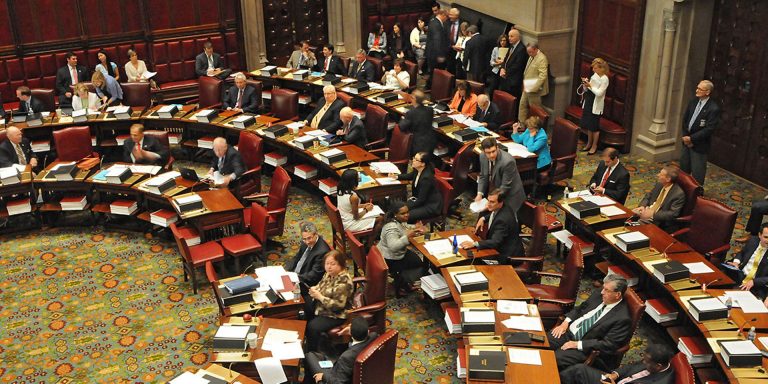

New York lawmakers have passed a sweeping bill aimed at holding artificial intelligence giants like OpenAI, Google, and Anthropic accountable for the safety of their most advanced models — even as Washington moves to penalize states for attempting to regulate AI.

The Responsible Artificial Intelligence Systems and Evaluation (RAISE) Act, now awaiting Governor Kathy Hochul’s signature, would require AI companies operating in New York to publish comprehensive safety and security reports for frontier models, disclose any incidents of dangerous behavior or breaches, and comply with legally binding transparency standards or face fines of up to $30 million.

The RAISE Act represents a significant milestone in the growing push for AI safety, a cause long championed by renowned researchers such as Geoffrey Hinton and Yoshua Bengio. For them and other experts, the threat posed by unregulated frontier AI models is no longer theoretical.

The Act specifically targets models trained with more than $100 million in computing resources and deployed to residents of New York. It is designed to prevent worst-case scenarios, including mass casualty events or economic damages exceeding $1 billion — risks that safety researchers say are becoming increasingly probable with the scale and capabilities of modern AI systems.

But New York’s bold move arrives at a politically fraught moment. At the federal level, Republican lawmakers have inserted into a broader spending bill a controversial clause that seeks to impose a 10-year moratorium on state-level AI regulation. In its revised form, the provision ties federal broadband funding to compliance with this moratorium. If it passes, states like New York that enact AI safety laws would lose access to key broadband infrastructure grants, including allocations from the Biden administration’s $42 billion Broadband Equity, Access, and Deployment (BEAD) program.

This means that by pushing ahead with the RAISE Act, New York risks being denied federal broadband funding for the next decade — a high-stakes gamble in a state where internet access remains unequal across rural and urban areas.

However, lawmakers say the risk is worth it. Senator Andrew Gounardes, who co-sponsored the RAISE Act, said he deliberately designed the bill to avoid stifling innovation among startups and academic researchers, the key criticism that helped sink California’s similar SB 1047 proposal. The RAISE Act does not require AI developers to build in kill switches, nor does it impose liability for downstream harms; critics say such measures would have been overly burdensome and legally ambiguous.

Gounardes argued that the pace of innovation leaves little time to act, pointing to alarming warnings from insiders who helped build the technology.

“The window to put in place guardrails is rapidly shrinking given how fast this technology is evolving,” said Gounardes. “The people that know [AI] the best say that these risks are incredibly likely […] That’s alarming.”

Assemblymember Alex Bores, who co-sponsored the bill in the State Assembly, acknowledged resistance from Silicon Valley, particularly from investors such as Andreessen Horowitz and startup incubators such as Y Combinator. But he said their opposition mirrors the backlash seen in California, and he predicted that New York’s economic clout as the third-largest state economy in the U.S. would ultimately keep companies from pulling their models from the state.

“I don’t want to underestimate the political pettiness that might happen,” Bores said, “but I am very confident that there is no economic reason for AI companies to not make their models available in New York.”

Jack Clark, co-founder of Anthropic, said the company has not taken an official position on the bill. However, he expressed concern that the Act, as currently written, could impose constraints on smaller developers — a view Gounardes dismissed, saying the bill is carefully tailored to target only the world’s most resource-intensive AI systems.

The bill’s passage also comes amid a persistent vacuum in federal oversight of artificial intelligence. Despite multiple congressional hearings and high-profile appearances by executives like Sam Altman calling for regulation, no comprehensive national AI framework has been enacted. In the absence of federal leadership, states have stepped in, proposing and passing AI legislation on everything from algorithmic bias to consumer data protection.

Washington, however, appears to be moving to shut down those efforts. The revised moratorium provision, now tied to broadband funding, represents an aggressive bid to centralize AI regulation at the federal level. Critics call it a dangerous overreach that would likely put corporate interests and the speed of innovation ahead of public safety.

If Hochul signs it, the RAISE Act would become the first law in the nation to establish mandatory safety protocols for frontier AI systems. By defying the federal threat, New York is signaling that it is willing to risk its federal broadband funding for the sake of the Act, a standoff expected to shape the balance of power between the states and Washington over how AI is governed in the United States.

Critics, however, worry that the Act could scare AI companies away from New York.

“The NY RAISE Act is yet another stupid, stupid state level AI bill that will only hurt the US at a time when our adversaries are racing ahead,” said Andreessen Horowitz general partner Anjney Midha in a Friday post on X. Andreessen Horowitz and Y Combinator were among the fiercest opponents of SB 1047.

But Assemblymember Bores told TechCrunch that he is “very confident that there is no economic reason for [AI companies] to not make their models available in New York,” although he does not want to underestimate the political pettiness that might happen,