When ChatGPT launched in 2022, it became the fastest-growing consumer app at the time, spearheading the generative AI boom on its way to becoming the most valuable chatbot. However, AI's meteoric rise has been shadowed by controversy, including a surge in public anxiety.

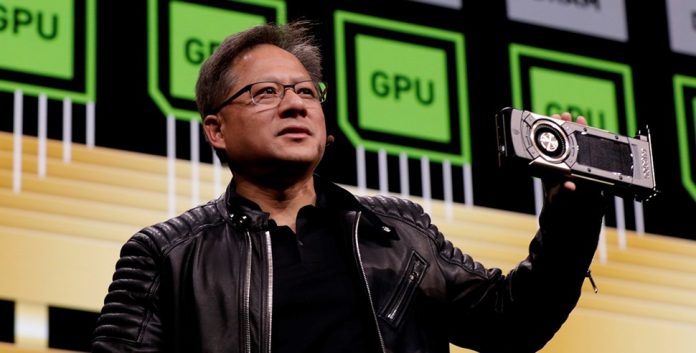

Fears about mass job losses, misinformation, and even civilizational collapse have dominated debates around AI’s future. Nvidia CEO Jensen Huang says that narrative has become excessive — and counterproductive.

Speaking on the No Priors podcast, Huang framed what he called the “doomer narrative” as one of the most damaging forces shaping the AI conversation today. For Huang, whose company supplies the chips that power much of the global AI ecosystem, the issue is no longer just about technical capability, but about how fear is influencing policy, investment, and public trust.

“One of my biggest takeaways from 2025 is the battle of the narratives,” Huang said, describing a widening divide between those who believe AI can broadly benefit society and those who argue it will erode economic stability or even threaten human survival.

He acknowledged that both optimism and caution have a place, but warned that repeated end-of-the-world framing has distorted the debate.

“I think we’ve done a lot of damage with very well-respected people who have painted a doomer narrative — end-of-the-world, science fiction narratives,” Huang said.

While conceding that science fiction has long shaped cultural imagination, he argued that leaning too heavily on those tropes is “not helpful to people, not helpful to the industry, not helpful to society, and not helpful to governments.”

A not-so-subtle rebuke of AI rivals

Although Huang did not name specific individuals, his comments echo earlier public clashes with leaders of other major AI firms, most notably Anthropic CEO Dario Amodei. Last year, Amodei warned that AI could eliminate roughly half of all entry-level white-collar jobs within five years, potentially pushing unemployment toward 20%. Huang responded in June that he "pretty much disagree[d] with almost everything" Amodei had said.

That disagreement appears to go beyond economics and into philosophy and policy. On the podcast, Huang argued that AI companies should not be urging governments to impose heavier regulation, saying corporate advocacy for stricter rules often masks competitive self-interest.

“No company should be asking governments for more AI regulation,” Huang said. “Their intentions are clearly deeply conflicted. They’re CEOs, they’re companies, and they’re advocating for themselves.”

The subtext is clear: Huang sees some calls for regulation as an attempt by early AI leaders to lock in advantages, slow rivals, or shape rules in their own favor, rather than a neutral effort to protect society.

Regulation, geopolitics, and the China fault line

The rift between Nvidia and Anthropic has also played out in geopolitics. In May 2025, the two companies took opposing stances on the U.S. AI Diffusion Rule, which was designed to restrict exports of advanced AI technologies to countries including China. Anthropic supported tighter controls and stronger enforcement, highlighting cases of alleged chip smuggling.

Nvidia pushed back sharply, dismissing claims that its hardware had been trafficked into China through elaborate schemes. Huang has repeatedly argued that overly restrictive export controls risk weakening U.S. competitiveness without meaningfully slowing global AI development.

For Huang, this feeds into a broader concern: that fear-driven policymaking, fueled by apocalyptic rhetoric, could end up doing more harm than good.

One of Huang’s most pointed warnings was that relentless pessimism about AI could actually increase risks rather than reduce them. He argued that fear discourages investment in the very research and infrastructure needed to make AI systems safer, more reliable, and more socially useful.

“When 90% of the messaging is all around the end of the world and pessimism,” Huang said, “we’re scaring people from making the investments in AI that make it safer, more functional, more productive, and more useful to society.”

In Huang’s view, safety does not come from paralysis or blanket restriction, but from sustained development, testing, and deployment — all of which require capital, talent, and public confidence.

An industry divided from within

Huang is not alone among tech leaders expressing frustration with the tone of the AI debate. Microsoft CEO Satya Nadella has criticized what he sees as dismissive conversations that reduce AI output to “slop,” while Mustafa Suleyman, head of Microsoft’s AI division, described widespread public criticism of AI as “mind-blowing” late last year.

Yet the backlash is rooted in tangible outcomes, not just abstract fear. Estimates suggest that more than 20% of YouTube content now consists of low-quality or spam-like AI-generated material, while layoffs tied to automation and AI adoption continue to ripple through media, tech, and customer service roles. For many workers, skepticism reflects lived experience rather than science fiction.

As Huang's remarks make clear, the disagreement is no longer simply about how fast AI should advance, but about who gets to define its risks, who shapes regulation, and whether caution is a necessary brake or an overreaction that could blunt progress. For the Nvidia CEO, the real danger lies in letting fear dominate the conversation: excessive pessimism, he argues, risks slowing innovation, weakening competitiveness, and, ironically, making AI less safe in the long run.