The term “cascading use model” isn’t a widely standardized phrase in GPU literature as of November 2025. It does, however, aptly describe an emerging paradigm in GPU utilization: computational resources are reused and repurposed in a sequential, layered, or “cascading” fashion across different workloads, users, or stages of processing.

This model is particularly relevant to AI, high-performance computing (HPC), and data centers, where GPU scarcity, driven by demand from large language model training, simulations, and edge inference, has pushed innovation in resource efficiency.

In traditional GPU usage, a high-end chip like an NVIDIA H100 or AMD MI300X might be dedicated to a single task (e.g., training a neural network for hours or days), so it sits idle or underutilized whenever that task is not saturating it. The cascading model flips this through two mechanisms.

Sequential Reuse: A GPU processes heavy compute tasks first (e.g., model training), then “cascades” the hardware to lighter tasks (e.g., inference, fine-tuning, or even non-AI workloads like video rendering).

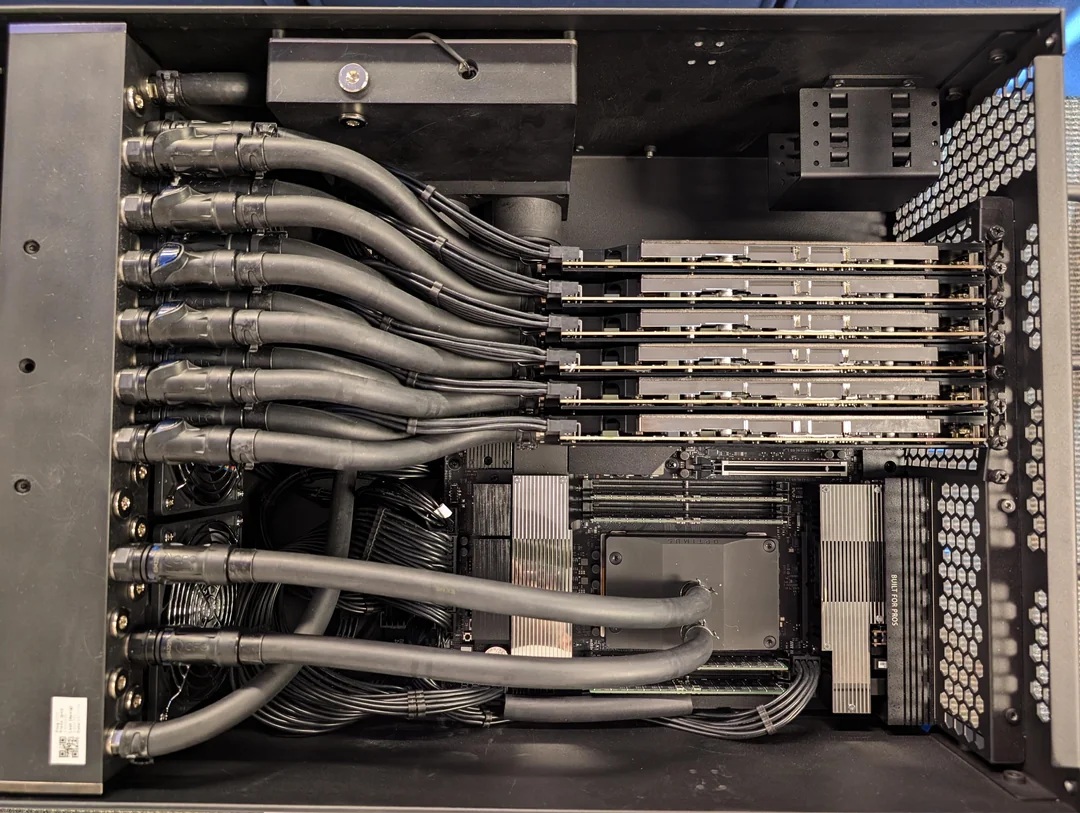

Layered Allocation: Resources are dynamically partitioned—e.g., using time-slicing, MIG (Multi-Instance GPU) partitioning, or SR-IOV virtualization—to serve multiple users or applications in a waterfall-like hierarchy, where overflow from one layer feeds the next.

Both mechanisms aim to minimize waste by handing underutilized cycles to the next workload in line, and they are often implemented with software like Kubernetes schedulers or NVIDIA’s CUDA Multi-Process Service (MPS).
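To make the idea concrete, here is a minimal sketch of sequential reuse and overflow between layers, written as a toy scheduler. It is an illustration only: the `Job` class, the `tier` field, and `cascade_schedule` are hypothetical names invented for this example, and real deployments would rely on schedulers such as Kubernetes together with MIG or MPS rather than anything this simple.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(order=True)
class Job:
    # Hypothetical model: tier 0 is the heaviest work (e.g., training);
    # higher tiers are lighter (inference, rendering). Only `tier` is compared.
    tier: int
    name: str = field(compare=False)
    gpu_hours: float = field(compare=False)


def cascade_schedule(jobs: List[Job], capacity_hours: float) -> Tuple[List[Tuple[str, float]], float]:
    """Grant GPU hours tier by tier; whatever one tier leaves unused
    cascades down to the next. Returns (plan, leftover_hours)."""
    remaining = capacity_hours
    plan: List[Tuple[str, float]] = []
    for job in sorted(jobs):  # heaviest tier first; ties keep input order
        if remaining <= 0:
            break  # nothing left to cascade
        granted = min(job.gpu_hours, remaining)
        plan.append((job.name, granted))
        remaining -= granted
    return plan, remaining


if __name__ == "__main__":
    # Assumed example day: one GPU with 24 available hours.
    jobs = [
        Job(1, "inference", 6.0),
        Job(0, "training", 16.0),
        Job(2, "rendering", 4.0),
    ]
    plan, leftover = cascade_schedule(jobs, 24.0)
    print(plan, leftover)
```

In this assumed example, training takes 16 hours, inference gets its full 6, and rendering receives only the 2 hours that remain, so the card ends the day with no idle time. A dedicated-silo setup would have left 8 of the 24 hours unused.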

This contrasts with the “dedicated silo” model, where GPUs sit idle 50-70% of the time in many data centers. Under the cascading model, GPUs are seeing a surge in value and adoption for several reasons.

Data centers report 2-3x higher throughput under cascading. For instance, hyperscalers like Google and AWS have adopted it via tools like NVIDIA’s GPU Operator, boosting effective FLOPS (floating-point operations per second) by repurposing chips for API serving once training finishes.

With GPU prices hovering at $30,000+ per unit, cascading can cut effective costs by 40-60% through better amortization, making AI infrastructure more accessible to mid-sized firms.
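The amortization argument can be checked with a few lines of arithmetic. The sketch below is a simplification under stated assumptions: the function names are hypothetical, the three-year service life is an assumed figure, and the 40% and 80% utilization levels are illustrative values chosen to match the idle-time range quoted above. It also ignores power, cooling, and staffing costs.

```python
def cost_per_useful_hour(price_usd: float, lifetime_hours: float, utilization: float) -> float:
    """Hardware cost per hour of actual work, given the fraction of time the GPU is busy."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price_usd / (lifetime_hours * utilization)


def savings_fraction(util_before: float, util_after: float) -> float:
    """Relative drop in cost per useful hour when utilization rises.
    Price and lifetime cancel out, leaving 1 - before/after."""
    return 1 - util_before / util_after


if __name__ == "__main__":
    price = 30_000            # USD, the per-unit figure cited in the text
    lifetime = 3 * 365 * 24   # assumed three-year service life, in hours
    before = cost_per_useful_hour(price, lifetime, 0.40)  # assumed silo utilization
    after = cost_per_useful_hour(price, lifetime, 0.80)   # assumed cascaded utilization
    print(f"before: ${before:.2f}/h, after: ${after:.2f}/h")
    print(f"savings: {savings_fraction(0.40, 0.80):.0%}")
```

Because price and lifetime cancel, the saving depends only on the utilization ratio: doubling utilization from 40% to 80% halves the cost per useful hour, which sits inside the 40-60% range quoted above.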

Scalability for AI Workloads

In the AI boom, training (which dominates 80% of GPU cycles) cascades naturally to inference, the “serving” phase in which models are queried billions of times daily. This is evident in systems like Grok’s backend, where xAI leverages cascading to handle real-time queries after model updates.

Consumer GPUs (e.g., RTX 40-series) benefit in gaming rigs that cascade from ray-tracing renders to AI upscaling via DLSS, extending hardware lifespan.

GPUs consume massive power, up to 700W per card, contributing to data center energy demands that rival those of small countries. Cascading reduces total hardware needs and so lowers carbon footprints; for example, Microsoft’s Azure reports 25% energy savings via dynamic cascading in its AI clusters.

Open-source frameworks like Ray or Dask enable cascading across hybrid CPU-GPU setups, democratizing access.

NVIDIA’s GTC 2025 keynote emphasized “cascading compute fabrics” in Blackwell architectures, with partnerships like Meta’s Llama deployments showing 1.5x inference speedups. GPU shipment forecasts from Jon Peddie Research predict a 15% YoY growth in enterprise segments, partly attributed to cascading-enabled virtualization, countering supply constraints from TSMC fabs.

Not all workloads cascade seamlessly. Real-time graphics, for example, may conflict with ML pipelines, so mixed deployments require advanced orchestration such as Kubernetes with GPU plugins.

In summary, the cascading use model is transforming GPUs from expensive bottlenecks into flexible powerhouses, fueling the AI revolution while addressing efficiency hurdles.