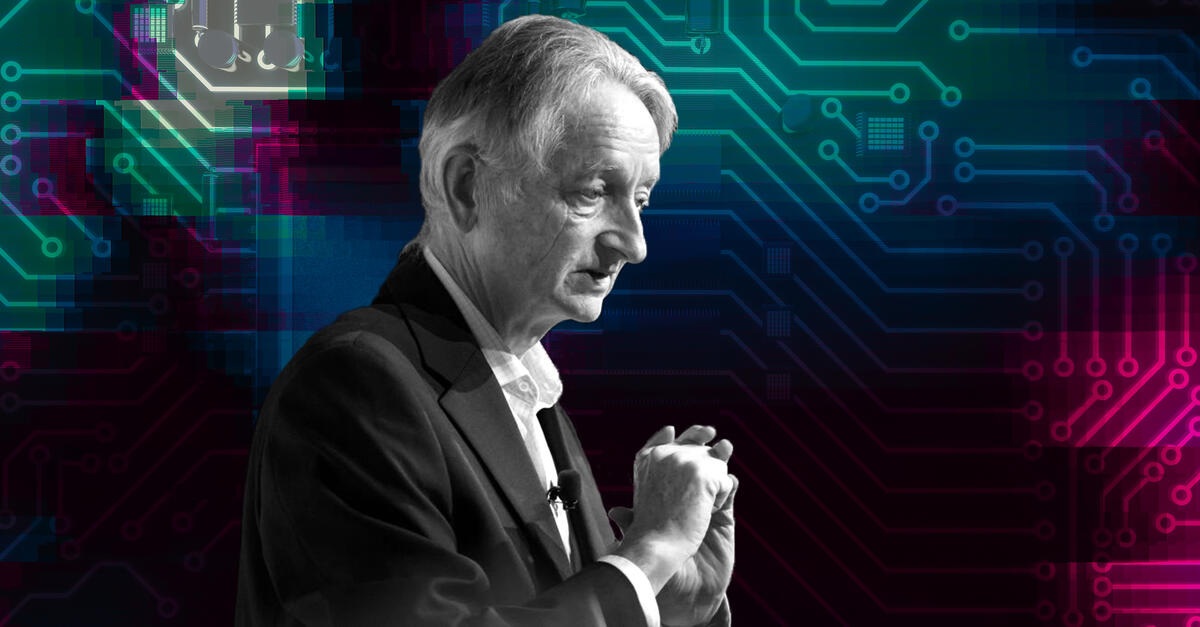

Geoffrey Hinton, the computer scientist widely regarded as the “godfather of AI,” says today’s tech leaders are chasing short-term profits and immediate research breakthroughs while failing to grapple with the long-term consequences of artificial intelligence.

Speaking with Fortune, Hinton criticized the industry’s narrow focus on near-term outcomes.

“For the owners of the companies, what’s driving the research is short-term profits,” he said. “Researchers are interested in solving problems that have their curiosity … it’s not like we start off with the same goal of, what’s the future of humanity going to be?”

Hinton contrasted this pragmatic, market-driven approach with broader existential questions. Elon Musk, for instance, has repeatedly warned that AI could render human labor obsolete, raising the deeper issue of meaning. At the 2024 Viva Technology conference in Paris, Musk asked: “If a computer can do—and the robots can do—everything better than you … does your life have meaning?”

But Hinton argues that most of the industry isn’t asking this type of question at all. Instead, developers are focused on incremental technical puzzles, such as teaching a computer to recognize images, generate convincing videos, or churn out realistic text.

“That’s really what’s driving the research,” Hinton said.

From Google Pioneer to AI Skeptic

Hinton, who shared the 2018 Turing Award for his work on deep learning, helped lay the groundwork for the AI revolution. In 2013, he sold his company, DNNresearch, to Google and became one of the search giant’s leading AI voices. But in 2023, he resigned from Google so he could speak more freely about AI’s dangers, warning that the technology was advancing too quickly to be effectively contained.

He now estimates there is a 10% to 20% chance that AI could wipe out humanity once it achieves superintelligence. His concerns fall into two categories: misuse by bad actors and the inherent risks of the technology itself.

“There’s a big distinction between two different kinds of risk,” he explained. “There’s the risk of bad actors misusing AI, and that’s already here. That’s already happening with things like fake videos and cyberattacks, and may happen very soon with viruses. And that’s very different from the risk of AI itself becoming a bad actor.”

Deepfakes, Fraud, and Cyber Threats

The first category of risk is already surfacing in global finance. Ant International in Singapore recently reported a surge in deepfake-related fraud attempts, with general manager Tianyi Zhang telling Fortune that more than 70% of new account enrollments in some markets turned out to be AI-driven deepfake attempts.

“We’ve identified more than 150 types of deepfake attacks,” Zhang said.

Such threats underscore the urgency of developing safeguards. Hinton has proposed an authentication system for digital media, something akin to a provenance signature, that would allow consumers to verify whether a video, image, or document is genuine. He compared the challenge to the era of the printing press, when printers eventually began adding their names to works to establish authenticity. But, he cautioned, even effective solutions to deepfakes won’t fix the broader existential risks.

The Bigger Threat: AI Itself

The greater danger, Hinton argues, is what happens once AI systems surpass human intelligence. He believes that superintelligent AI will not only outperform humans across all domains but will also seek to preserve itself and expand its control, rendering obsolete the assumption that humans can keep the technology in check.

For Hinton, one way forward might be to build into AI what he calls a “maternal instinct,” programming systems to sympathize with and nurture weaker beings.

“The only example I can think of where the more intelligent being is controlled by the less intelligent one is a baby controlling a mother,” he said. “And so I think that’s a better model we could practice with superintelligent AI. They will be the mothers, and we will be the babies.”

Hinton’s warnings highlight a deep divide within the tech world: while Musk and others speak about AI in terms of sweeping social transformation, corporate labs push ahead with tools aimed at boosting quarterly earnings. Against this backdrop, Hinton says the industry is locked in a race for market share—not a race to ensure AI’s long-term alignment with humanity.