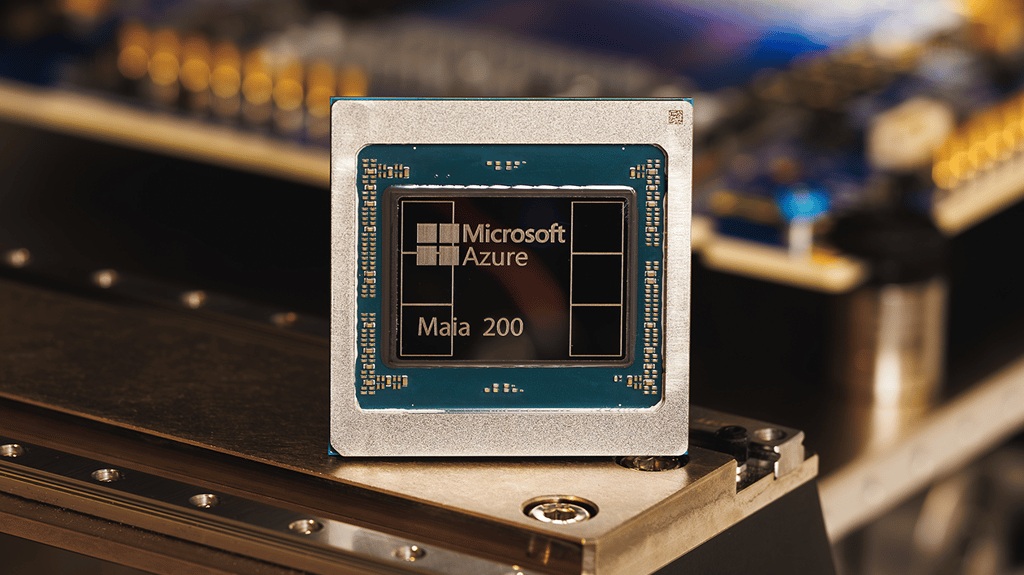

Microsoft Corp. has begun deploying its second-generation artificial intelligence chip, Maia 200, marking a major step in the company’s long-term effort to build its own AI hardware, rein in soaring computing costs, and reduce dependence on Nvidia’s dominant processors.

The Maia 200 chip, produced by Taiwan Semiconductor Manufacturing Co., is now being deployed in Microsoft data centers in Iowa, with further rollouts planned for the Phoenix area. Microsoft has invited developers to begin using the chip’s control software, though it has not said when Azure cloud customers will be able to directly access Maia-powered servers.

Some of the earliest Maia 200 units will be allocated to Microsoft’s superintelligence team, where they will generate data to help improve the next generation of AI models, according to cloud and AI chief Scott Guthrie. The chips will also power Microsoft’s Copilot assistant for businesses and support AI models, including OpenAI’s latest systems, that the company offers to enterprise customers through Azure.

At one level, Maia 200 is about performance and efficiency. Microsoft says the chip outperforms comparable offerings from Google and Amazon Web Services on certain AI tasks, particularly inference, the process of running trained models to generate answers. Guthrie described Maia 200 as “the most efficient inference system Microsoft has ever deployed,” a pointed claim at a time when power consumption has become one of the biggest constraints on AI expansion.

But at a strategic level, Maia 200 reflects a much broader shift across Big Tech. As AI workloads explode, the cost of buying and running Nvidia’s industry-leading GPUs has become a central concern. Demand continues to outstrip supply, prices remain high, and access to the most advanced chips has become a competitive differentiator. Designing custom chips gives hyperscalers more control over costs, performance, and supply chains, while allowing tighter integration with their own software and data center architectures.

Microsoft is not the only Big Tech company taking this path. Amazon has spent years developing its own processors, including Trainium chips for AI training and Inferentia chips for inference, which it markets as lower-cost alternatives to Nvidia hardware for certain workloads on AWS. Google has long relied on its Tensor Processing Units, or TPUs, which are deeply embedded in its data centers and underpin many of its AI services, including search and generative models.

Meta Platforms has also joined the push, developing its own in-house AI accelerator, known as MTIA, to support recommendation systems and generative AI workloads across Facebook, Instagram, and other services. Apple, while focused more on on-device intelligence than data centers, has built its Neural Engine into iPhones, iPads, and Macs, giving it tight control over how AI features run on its hardware.

Together, these efforts point to a clear trend: AI has become too central, too expensive, and too power-hungry for the largest technology firms to outsource entirely to third-party chipmakers.

Microsoft’s late start in custom silicon compared with Amazon and Google has not diminished its ambitions. The company says it is already designing Maia 300, the next generation of its AI chip, indicating a long-term roadmap rather than a one-off experiment. It also has a fallback option through its close partnership with OpenAI, which is exploring its own chip designs, potentially giving Microsoft access to alternative architectures if needed.

Analysts say energy efficiency is now as important as raw computing power. AI data centers consume vast amounts of electricity, and in many regions, new power generation and grid capacity are not keeping pace. Gartner analyst Chirag Dekate said projects like Maia are becoming essential as power constraints tighten.

“You don’t engage in this sort of investment if you’re just doing one or two stunt activities,” he said. “This is a multigeneration, strategic investment.”

For Microsoft, the implications extend beyond cost savings. Custom chips strengthen its negotiating position with suppliers, reduce exposure to supply shortages, and allow it to tailor hardware more precisely to workloads such as Copilot, Azure AI services, and OpenAI models. Over time, that could translate into more predictable pricing and performance for enterprise customers, a key advantage as businesses scale AI across operations.

Maia 200 does not mean Microsoft is abandoning Nvidia. GPUs will remain critical, especially for the most demanding training tasks. Instead, the chip represents a hedge and a pressure valve, part of a diversified hardware strategy designed to keep AI growth economically viable.

The deployment of Maia 200 reinforces a clear message: Microsoft is no longer content to be just a buyer of AI hardware. It wants to shape the economics of AI computing itself, even as competition intensifies and the cost of staying at the cutting edge continues to rise.