Two days after news surfaced that Nvidia had struck what amounts to a $20 billion deal with Groq, the most striking aspect of the transaction is not just its size, but how little noise accompanied it.

There was no press release from Nvidia. No regulatory filing. No investor call. Instead, confirmation has come indirectly through a 90-word blog post published by Groq late on Christmas Eve and from people familiar with the deal. Nvidia, now the world’s most valuable company, has simply acknowledged that the blog post accurately reflects the arrangement.

“They’re so big now that they can do a $20 billion deal on Christmas Eve with no press release and nobody bats an eye,” Bernstein analyst Stacy Rasgon said Friday on CNBC’s Squawk on the Street, underscoring how normalized mega-deals have become in the AI era.

According to CNBC, which cited Groq lead investor Alex Davis, Nvidia agreed to spend $20 billion in cash to acquire key assets and talent from Groq under what the startup described as a “non-exclusive licensing agreement.” Neither company has formally confirmed the price tag. Davis’ firm, Disruptive, has invested more than $500 million in Groq since the startup’s founding and led its most recent funding round in September, when the company was valued at $6.9 billion.

Under the deal, Groq founder and CEO Jonathan Ross, company president Sunny Madra, and several senior leaders will join Nvidia to help scale the licensed technology. Groq will continue operating as an independent company, led by finance chief Simon Edwards, and its cloud business remains outside the transaction.

Had Nvidia pursued a conventional acquisition, the Groq transaction would have been by far the largest deal in the chipmaker’s 32-year history. Nvidia’s biggest prior purchase was the $7 billion acquisition of Israeli networking firm Mellanox in 2019, a move that later proved critical to its data center strategy. Instead, Nvidia opted for a structure increasingly favored across Big Tech: paying billions to secure people and technology without formally buying the company.

This approach has been used repeatedly over the past two years by Meta, Google, Microsoft, and Amazon, particularly in AI, where competition for talent and intellectual property has intensified while regulatory scrutiny has grown. Nvidia itself used the same playbook in September, when it spent more than $900 million to hire Enfabrica CEO Rochan Sankar and other employees while licensing the startup’s networking technology.

By avoiding a full acquisition, companies reduce the risk of prolonged antitrust reviews and can close deals faster, while still locking up capabilities competitors might otherwise access. Rasgon noted in a client memo that antitrust risk is the most obvious issue surrounding the Groq transaction, but said the non-exclusive licensing structure may be enough to keep regulators at arm’s length.

Nvidia shares rose about 1% on Friday to $190.53. The stock is up roughly 42% this year and more than thirteenfold since late 2022, when generative AI exploded into the mainstream after the launch of OpenAI’s ChatGPT. Nvidia’s cash position has ballooned alongside that rally. At the end of October, the company held $60.6 billion in cash and short-term investments, up from $13.3 billion in early 2023.

That expanding war chest has allowed Nvidia to spread capital aggressively across the AI ecosystem, including investments in OpenAI and Intel, while tightening its grip on critical parts of the hardware stack.

The strategic logic behind Groq sits squarely in the next phase of AI. Nvidia dominates AI training, where massive amounts of data are processed to teach models how to recognize patterns. Groq’s strength lies in inference — the stage where trained models process new information to generate responses in real time. As AI moves from experimentation to deployment, inference is becoming more commercially important, with customers prioritizing speed, efficiency, and cost.

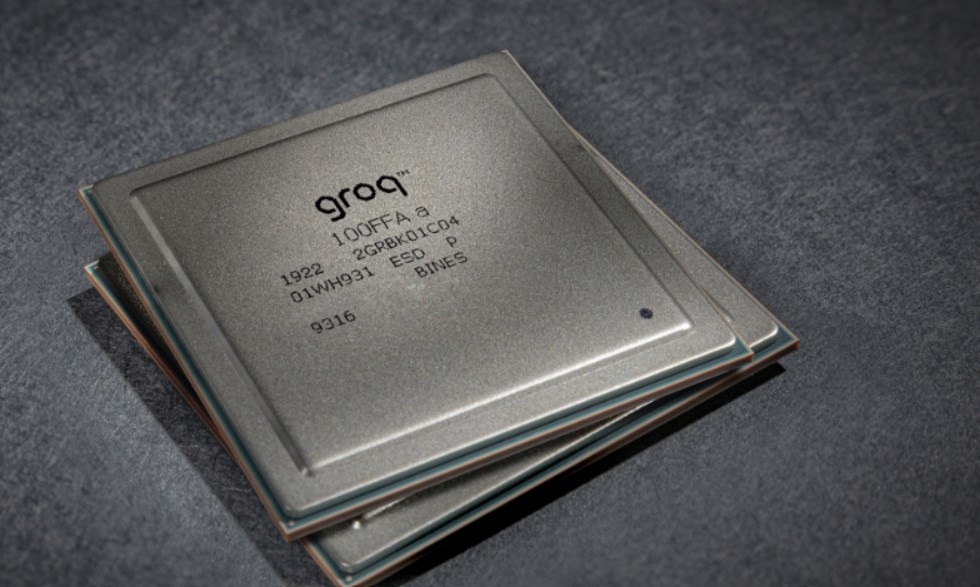

Groq was founded in 2016 by former Google engineers, including Ross, who helped create Google’s tensor processing units, or TPUs. Those custom chips have emerged as one of the few credible alternatives to Nvidia’s graphics processing units in certain AI workloads. Groq’s language processing units were designed specifically for low-latency inference, positioning the company as a potential rival or acquisition target for any firm seeking to challenge Nvidia’s dominance.

That background helps explain why analysts see the deal as both offensive and defensive. Cantor analysts said in a note Friday that Nvidia is strengthening its full system stack while ensuring Groq’s assets do not end up in the hands of a competitor. They maintained a buy rating and a $300 price target, saying the transaction widens Nvidia’s competitive moat.

BofA Securities analysts also reiterated their buy rating and $275 target, calling the deal “surprising, expensive but strategic.” They said it reflects Nvidia’s recognition that while GPUs have ruled AI training, the industry’s rapid shift toward inference may demand more specialized chips.

At the same time, unanswered questions linger. Analysts have pointed to uncertainty around who controls Groq’s core intellectual property, whether the licensed technology can be made available to Nvidia competitors, and whether Groq’s remaining cloud business could eventually compete with Nvidia’s own AI services on price.

Nvidia has declined to comment on those details. The next opportunity for investors to hear directly from the company will likely come on Jan. 5, when CEO Jensen Huang is scheduled to speak at CES in Las Vegas.

Until then, the Groq deal stands as a clear signal of how Nvidia now operates at scale: moving quietly, spending decisively, and shaping the future of AI hardware without feeling the need to explain every move in real time.