The crypto market has regained its bullish momentum following Trump’s support for a Fed rate cut. Ethereum (ETH) is again leading the charge after a mid-July breakout, as investors eye the $5,000 milestone.

However, while ETH is reclaiming its dominance among large-cap cryptocurrencies, savvy investors are turning toward lesser-known, high-upside plays such as Little Pepe (LILPEPE), a sub-$0.002 meme coin that pitches real utility, breakout potential, and a growing community. With over $13.2 million raised in its presale and more than 9.3 billion tokens sold, Little Pepe is no longer a hidden gem; it’s a rising star. As Ethereum gears up for its next leg higher, LILPEPE is being positioned as the next low-cost mover, with supporters touting as much as 1000x upside.
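The two presale totals above imply an average price paid per token. A minimal Python sketch, assuming the quoted figures are exact (the article gives them only as lower bounds) and using illustrative variable names:

```python
# Figures quoted in the article, treated here as approximate inputs.
raised_usd = 13.2e6   # "over $13.2 million raised"
tokens_sold = 9.3e9   # "more than 9.3 billion tokens sold"


def average_price(raised: float, tokens: float) -> float:
    """Average USD price paid per token across all presale stages so far."""
    return raised / tokens


avg = average_price(raised_usd, tokens_sold)
print(f"Average presale price: ${avg:.5f}")
```

On these inputs the average works out to roughly $0.0014 per token, consistent with a presale that has stepped up in price from stage to stage while staying below $0.002.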

Presale Momentum and Growing Ecosystem Back LILPEPE’s Rise

The presale success of Little Pepe isn’t luck. It results from precise execution, viral community traction, and a vision that resonates across meme coin enthusiasts and serious crypto investors. Now in Stage 8, with 91% of the tokens sold and the current price up 70% from Stage 1, the LILPEPE presale has outpaced many of its peers in both capital raised and speed of sale. Early buyers are already sitting on notable gains, and with the token set to list at $0.003, even late presale participants have a clear upside.

This momentum is backed by more than hype. The project has launched a $777,000 giveaway, offering 10 lucky winners $77,000 each in tokens. It has also completed a smart contract audit and been listed on CoinMarketCap, and it is preparing for CEX listings after the presale. Together with the roadmap, these serve as clear credibility indicators.

Ethereum Eyes $5,000 as Institutional Floodgates Open

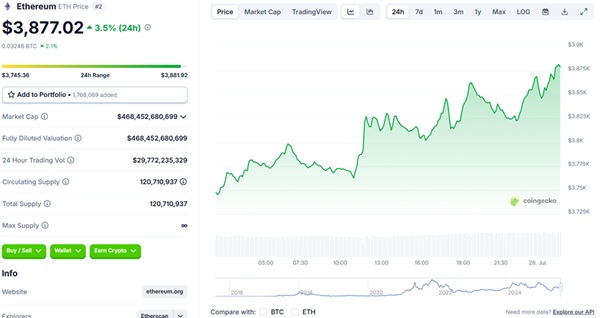

Ethereum’s price action in July has been explosive. After breaking out of a 3.7-year descending trendline and forming a bullish wedge, ETH surged from $2,090 to over $3,700, stabilizing above $3,870.

Ethereum Price Chart | Source: CoinGecko

Analysts noted that a weekly close above $4,000 opens the gates to $4,800 and even $5,000, driven by technical patterns and on-chain strength. What’s pushing this rally? Institutional demand.

Ethereum ETFs have logged a record-breaking 17-day inflow streak, with over $2.4 billion in inflows last week alone, surpassing the inflows of Bitcoin ETFs. BlackRock’s ETHA ETF pulled in $440 million in a single day.

Even Ethereum’s Layer-2 networks are booming. Arbitrum, Optimism, and zkSync drive transaction volume and scale the Ethereum network, which now handles over $10 billion in weekly on-chain activity. If the momentum continues into August, historically ETH’s strongest month, we could see Ethereum reach its $5,000 target sooner than most expect.

How Little Pepe Is Rewriting the Meme Playbook

While Ethereum leads on the institutional front, Little Pepe is rewriting how meme coins operate by putting utility first. At the core of the LILPEPE ecosystem is its Layer 2 EVM-compatible blockchain, designed to provide meme coins and micro-cap tokens with scalable, low-cost infrastructure. The project bills this as a first in the meme space, where most tokens lack a technical foundation.

Built on this Layer 2 is the Pepe Launchpad, a no-code token launch platform that allows anyone to create and deploy their own meme tokens safely and quickly. With sniper bot resistance, 0% buy/sell tax, and a clear roadmap, Little Pepe positions itself as a meme-powered launch engine for the next wave of community tokens.

What makes this more attractive is that all of these utilities center on the $LILPEPE token, an ERC-20 token, so demand for it should grow as the platform does. The project also leverages meme virality, boasting a strong social presence and consistent traction across platforms like X (formerly Twitter), Telegram, and TikTok.

Best Breakout Candidate Below $0.002? Here’s Why It’s LILPEPE

With its current price below $0.002, Little Pepe offers one of the lowest barriers to entry in the entire meme coin sector and one of the highest upside potentials.

Why?

- Planned Tier-1 CEX Listings will expose LILPEPE to global retail demand.

- It is built as a sustainable, long-term utility project, unlike pump-and-dump meme coins.

- Its Layer 2 blockchain and Launchpad create real infrastructure for developers and meme coin fans.

- The current presale price ($0.0017) sits well below the planned $0.003 listing price, a gain of roughly 76% at listing, and that’s just the beginning.
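The price points quoted in this article can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: it takes the $0.0017 presale price, the $0.003 listing price, and the 70% rise since Stage 1 at face value, and its function and variable names are assumptions rather than part of any project tooling.

```python
# Inputs quoted in the article; none are independently verified here.
presale_price = 0.0017  # current presale price in USD
listing_price = 0.003   # planned listing price in USD
stage_gain = 0.70       # current price is "up 70% from Stage 1"


def pct_gain(entry: float, exit_price: float) -> float:
    """Percentage gain from buying at `entry` and selling at `exit_price`."""
    return (exit_price / entry - 1) * 100


# Gain for a buyer at the current presale price if the token lists as planned.
listing_gain = pct_gain(presale_price, listing_price)

# Stage 1 price implied by a 70% rise to the current price.
implied_stage1 = presale_price / (1 + stage_gain)

# Price the token would need to reach for a 1000x return from today's price.
price_for_1000x = presale_price * 1000

print(f"Gain at listing: {listing_gain:.1f}%")
print(f"Implied Stage 1 price: ${implied_stage1:.4f}")
print(f"Price needed for 1000x: ${price_for_1000x:.2f}")
```

With these inputs, the gain at listing comes to roughly 76%, the implied Stage 1 price to about $0.001, and a 1000x return would require a token price near $1.70.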

As Ethereum races to $5,000, attention will inevitably shift to lower-cap projects with viral energy and genuine fundamentals. LILPEPE sits at the intersection of both. With a strong roadmap, presale traction, and technical features rarely seen in meme projects, it’s primed to be the best low-cost mover of 2025.

Conclusion

Ethereum’s march toward $5,000 is strengthening with every ETF inflow, whale buy, and technical confirmation. But Little Pepe stands out as the top cheap crypto to watch in 2025 for those seeking bigger percentage returns. Its under-$0.002 price point, Layer-2 tech stack, and meme-market appeal give it breakout potential unmatched by most altcoins. Join the Little Pepe Presale now, before the price climbs again!

For more information about Little Pepe (LILPEPE), visit the links below:

Website: https://littlepepe.com

Whitepaper: https://littlepepe.com/whitepaper.pdf

Telegram: https://t.me/littlepepetoken

Twitter/X: https://x.com/littlepepetoken