10 Gbps Ethernet (10GbE) has established itself as the standard way to connect server cards to the top-of-rack (ToR) switch in data-center racks. So what’s it doing in the architectural plans for next-generation embedded systems? It is a tale of two separate but connected worlds.

Inside the Data Center

If we can say that a technology has a homeland, then the home turf of 10GbE would be inside the cabinets that fill data centers. There, the standard has provided a bridge across a perplexing architectural gap.

Data centers live or die by multiprocessing: their ability to partition a huge task across hundreds, or thousands, of server cards and storage devices. And multiprocessing in turn succeeds or fails on communications—the ability to move data so effectively that the whole huge assembly of CPUs, DRAM arrays, solid-state drives (SSDs), and disks acts as if they were one giant shared-memory, many-core system.

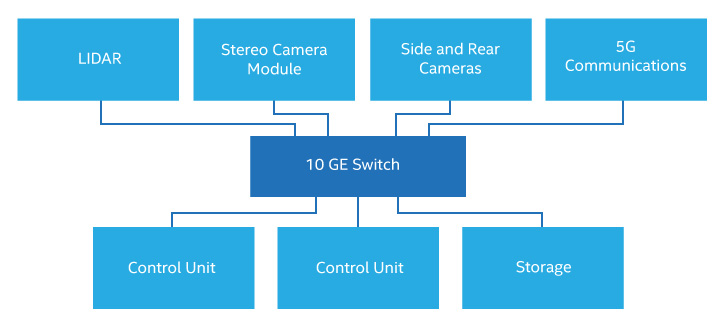

Figure 1. Autonomous vehicles, for example, can generate a deluge of high-speed data.

This need puts special stress on the interconnect fabric. Obviously it must offer high bandwidth at the lowest possible latency. And since the interconnect will touch nearly every server and storage controller card in the data center, it must be inexpensive—implying commodity CMOS chips—compact, and power efficient.

And the interconnect must support a broad range of services. Blocks of data must shuffle to and from DRAM arrays, SSDs, and disks. Traffic must pass between servers and the Internet. Remote direct memory access (RDMA) must allow servers to treat each other’s memory as local. Some tasks may want to stream data through a hardware accelerator without using DRAM or cache on the server cards. As data centers take on network functions virtualization (NFV), applications may try to reproduce the data flows they enjoyed in hard-wired appliances.

Against these needs stand a range of practical constraints. Speed and short latency cost money. What is technically achievable on the lab bench may not be feasible for 50,000 server cards in a warehouse-sized data center. Speed and distance trade off—the rate you can obtain over five meters may be impossible across hundreds of meters. And on the whole, copper twisted pairs are cheaper than optical fibers. Finally, flexibility matters: no one wants to rip out and replace a data center network to accommodate a new application.

After blending these needs and constraints, data-center architects generally came to similar conclusions (Figure 2). They connected all the server, storage, and accelerator cards in a rack together through 10GbE over twisted pairs to a ToR switch. Then they connected all the ToR switches in the data center together through a hierarchy of longer-range optical Ethernet networks. The Ethernet protocol allowed use of commodity interface hardware and robust software stacks, while giving a solid foundation on which to overlay more specialized services like streaming and security.

Figure 2. A typical data center, before upgrade to faster networks, uses 10GbE for interconnect within a server rack.

Today, the in-rack links are evolving from 10 Gbps to 25 or 40 Gbps. But the 10GbE infrastructure has been deployed, cost-reduced, and field-proven, and is ready to seek new uses.

Embedded Evolution

As 10GbE was solidifying its role in server racks, an entirely different change vector was growing in the embedded world. Arguably, the change started in systems that were already dependent on video—ranging from broadcast production facilities to machine-vision systems. The driving force was the growing bit rate of the raw video signal coming off of the cameras.

Perhaps the first application domain to feel the pain was broadcast, where 1080p video demanded almost 3 Gbps. The industry responded with its own application-specific serial digital interface (SDI). But as production facilities and head-ends came more and more to resemble data centers, the pressure grew to transport multiple video streams over standard network infrastructure. And 10GbE was a natural choice. The progression from 1080p to 4K UHD only accelerated the move.
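The "almost 3 Gbps" figure can be checked with simple arithmetic. The sketch below is illustrative only. It assumes 10-bit 4:2:2 sampling (an average of 20 bits per pixel), 60 frames per second for 1080p, and a total raster of 2200 x 1125 samples once blanking is included. The 4K case assumes 30 frames per second. None of these parameters are stated in the article, and the function name is hypothetical.

```python
def raw_video_gbps(width, height, fps, bits_per_pixel):
    """Return the uncompressed video bit rate in Gbps."""
    return width * height * fps * bits_per_pixel / 1e9

# Assumption: 10-bit 4:2:2 sampling averages 20 bits per pixel.
BITS_PER_PIXEL = 20

# Active picture only, 1080p at an assumed 60 frames per second.
active_1080p60 = raw_video_gbps(1920, 1080, 60, BITS_PER_PIXEL)

# Same format including horizontal and vertical blanking
# (assumed total raster of 2200 x 1125 samples).
total_1080p60 = raw_video_gbps(2200, 1125, 60, BITS_PER_PIXEL)

# Active picture for 4K (3840 x 2160) at an assumed 30 frames per second.
active_2160p30 = raw_video_gbps(3840, 2160, 30, BITS_PER_PIXEL)

if __name__ == "__main__":
    print(f"1080p60 active picture: {active_1080p60:.2f} Gbps")
    print(f"1080p60 with blanking:  {total_1080p60:.2f} Gbps")
    print(f"2160p30 active picture: {active_2160p30:.2f} Gbps")
```

Under these assumptions a single 1080p60 stream needs roughly 2.5 Gbps for the active picture and 2.97 Gbps with blanking, which is already well beyond a 1 Gbps link. A 4K stream at 30 frames per second takes about half of a 10 Gbps link on its own.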

But video cameras were used in machine vision as well. Some applications were fine with standard-definition monochrome video at low scan rates. But in many cases, the improved resolution, frame rate, dynamic range, and color depth of HD enabled significantly better performance for the overall system. How, then, to transport the video?

For systems only interested in edge extraction or simple object recognition, and for uses like surveillance, where the vast majority of data is discarded immediately, local vision processing at the camera is an obvious solution. With relatively simple hardware, such local processing can slash the required bandwidth between the camera and the rest of the system, bringing it within range of conventional embedded or industrial busses. And in many other cases local video compression at the camera can substantially reduce bandwidth requirements without harming the application.

Not every situation is so cooperative. Broadcast production studios are loath to throw away any bits—if they use compression at all, they want it to be lossless. Motion-control algorithms may need edge-location data at or even below pixel-level resolution, requiring uncompressed data. And convolutional neural networks, the current darlings of leading-edge design, may rely on pixel-level data in ways completely opaque to their designers. So you may have no choice but to transfer all of the camera data.

Even in situations where compression is possible, a module containing multiple imaging devices (say, several cameras and a lidar in an autonomous vehicle) can easily consume more than 1 Gbps just sending preprocessed image data.

And crossing that 1 Gbps boundary is a problem if you had planned to connect your high-bandwidth device into the system with Ethernet. Once you exceed the aggregate capacity of a 1 Gbps Ethernet link, the next step is not 2, it is 10. Hence the growing importance of 10GbE. But even with its economies of scale and its ability to use twisted-pair or backplane connections, the step up to 10GbE means more expensive silicon and controller boards. It’s not a trivial migration.

Get a Backbone

In many systems, 10GbE can handle not only the fastest I/O traffic in the design, but all the fast system I/O (Figure 3). The resulting gains in simplicity, reliability, cost, and weight can be a big enough advantage to justify the cost and power of the interfaces. For example, linking all the major modules in an autonomous vehicle (cameras, lidar, location, chassis/drivetrain, safety, communications, and electronic control unit) through a single 10GbE network could eliminate many meters and several kilograms of wiring. Compared to today's growing tangle of dedicated high-speed connections, which often require hand-installation of wiring harnesses, the unified approach can be a big win.

Figure 3. In an embedded system, 10GbE can provide a single backbone interconnect for a variety of high-bandwidth peripherals.

But unifying system interconnect around a local Ethernet network also presents issues. One, ironically, is the very issue that motivated interest in 10GbE in the first place: bandwidth. A machine-vision algorithm consuming the raw output of two HD video cameras would already be using over half the available bandwidth of a 10GbE backbone. So in systems with multiple multi-Gbps data flows, there are some hard choices to make. You can employ multiple 10GbE connections as point-to-point links. Or, if the algorithms can tolerate the latency, you can use local compression or data analytics at the source to reduce bandwidth needs—partitioning vision processing between camera and control unit, for example.
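A rough bandwidth budget makes the trade-off concrete. The sketch below is only an estimate under stated assumptions. The flow names and rates are hypothetical, each camera is taken as a raw stream of about 2.97 Gbps, and usable capacity accounts only for Ethernet framing overhead (38 bytes per frame for preamble, header, frame check sequence, and interframe gap) with an assumed 1500-byte payload. IP or UDP headers and switch behavior are ignored.

```python
ETHERNET_OVERHEAD_BYTES = 38  # preamble/SFD 8 + header 14 + FCS 4 + interframe gap 12

def payload_capacity_gbps(link_gbps, payload_bytes=1500):
    """Payload throughput of a link after per-frame Ethernet overhead."""
    return link_gbps * payload_bytes / (payload_bytes + ETHERNET_OVERHEAD_BYTES)

def backbone_budget(flows, link_gbps=10.0):
    """Return (total demand, usable capacity, utilization) for a set of flows."""
    used = sum(flows.values())
    capacity = payload_capacity_gbps(link_gbps)
    return used, capacity, used / capacity

# Hypothetical flows in Gbps; the names and rates are illustrative assumptions.
flows = {
    "camera_left": 2.97,
    "camera_right": 2.97,
    "lidar": 0.5,
    "control": 0.1,
}

if __name__ == "__main__":
    used, capacity, utilization = backbone_budget(flows)
    print(f"demand {used:.2f} Gbps of {capacity:.2f} Gbps usable "
          f"({utilization:.0%} of the backbone)")
```

With these numbers the two cameras alone consume well over half of the backbone, which is the situation described above. Passing `link_gbps=1.0` to the same function shows that the identical set of flows would oversubscribe a 1GbE link several times over.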

Another issue is cost. A small, low-bandwidth sensor may not be a sensible candidate for a $250 10GbE interface, or even a $50 chip. You may want to consolidate a number of such devices on one concentrator, or simply provide a separate, low-bandwidth industrial bus for them.

Timing is Everything

In the abstract, we have offered a promising scenario. Data centers have built up a huge infrastructure of chips, media, and boards behind 10GbE. Now the giant computing facilities are moving on to 25 or 40GbE, and all that infrastructure will go looking for new markets. At the same time, data rates in some embedded systems have sped past the bounds of frequently used 1GbE links, hinting at just the sort of opportunity the 10GbE vendors are seeking.

But reality doesn’t dwell in abstracts. In particular, the real embedded world cares about latency and other quality-of-service parameters. If a data-center ToR switch frequently shows unexpected latencies, the worst result is likely to be slightly longer execution times, and hence higher costs, for workloads that were never time-critical. In the embedded world, if you miss a deadline you break something, usually something big and expensive.

This is a long-understood issue with networking technology in embedded systems. And it has an established solution: the cluster of IEEE 802.1 standards collectively known as time-sensitive networking (TSN). TSN is a set of additions and changes to the 802.1 standards at Layer 2 and above that allow, in effect, Ethernet to offer guaranteed levels of service in addition to its customary best-effort service.

So far, three elements of TSN have been published: 802.1Qbv Enhancements for Scheduled Traffic, 802.1Qbu Frame Preemption, and 802.1Qca Path Control and Reservation. Each of these defines a service critical to using Ethernet in a real-time system.

One service is the ability to pre-define a path through the network for a virtual connection, rather than entrusting each packet to best-effort forwarding at each hop. By itself this facility may not be that useful in embedded systems, where the entire network is often a single switch.

But other parts of this spec are more relevant: the ability to reserve bandwidth or stream treatment for a connection and to provision redundancy to ensure delivery. Another service is the ability to pre-schedule transmission of frames for a connection on a network also carrying prioritized and best-effort traffic. And yet another element defines a mechanism for preempting a frame in order to transmit a scheduled or higher-priority frame. Together these capabilities allow a TSN network to guarantee bandwidth to a virtual connection, to create a virtual streaming connection, or to guarantee maximum latency for frames over a virtual connection.
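To illustrate the idea of pre-scheduled transmission, here is a highly simplified model of a repeating gate schedule, loosely in the spirit of 802.1Qbv. It is a sketch, not an implementation of the standard. The `GateEntry` structure, the queue numbers, and the 10-microsecond cycle are all hypothetical. A frame is allowed to start only if it can finish before its window closes, which is a simple stand-in for a guard band. Frame preemption is not modeled, and adjacent windows for the same queue are not merged.

```python
from dataclasses import dataclass

@dataclass
class GateEntry:
    duration_ns: int          # length of this window
    open_queues: frozenset    # queues allowed to transmit during the window

def frame_time_ns(frame_bytes, link_gbps=10.0):
    """Wire time of one frame, adding 8 bytes preamble/SFD and 12 bytes gap."""
    return (frame_bytes + 20) * 8 / link_gbps

def next_start_ns(schedule, queue, arrival_ns, tx_ns):
    """Earliest time at or after arrival when the frame fits in an open window."""
    cycle = sum(entry.duration_ns for entry in schedule)
    base = (arrival_ns // cycle) * cycle
    for k in range(2):  # search the current cycle, then the next one
        t = base + k * cycle
        for entry in schedule:
            end = t + entry.duration_ns
            if queue in entry.open_queues:
                start = max(t, arrival_ns)
                if start + tx_ns <= end:  # frame must finish inside the window
                    return start
            t = end
    return None  # frame never fits in any single window

# Hypothetical 10-microsecond cycle: 2 us for scheduled traffic (queue 7),
# then 8 us for best-effort traffic (queue 0).
schedule = [
    GateEntry(2000, frozenset({7})),
    GateEntry(8000, frozenset({0})),
]

control_tx = frame_time_ns(64)        # small scheduled frame
best_effort_tx = frame_time_ns(1518)  # full-size best-effort frame

if __name__ == "__main__":
    for arrival in (500, 2500):
        start = next_start_ns(schedule, 7, arrival, control_tx)
        print(f"scheduled frame arriving at {arrival} ns starts at {start} ns")
    start = next_start_ns(schedule, 0, 9000, best_effort_tx)
    print(f"best-effort frame arriving at 9000 ns starts at {start} ns")
```

In this model a scheduled frame that arrives inside its own window goes out immediately, and one that just misses the window waits for the next cycle, so its worst-case delay is bounded by the cycle length. A large best-effort frame that arrives too late to finish before the scheduled window is held back rather than allowed to intrude on it.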

Since TSN is essentially an overlay on 802.1 networks, TSN over 10GbE is feasible. At least one vendor has already announced a partially TSN-capable 10GbE media access controller (MAC) intellectual property (IP) core that works with standard physical coding sublayer IP and transceivers. So it is possible to implement a 10GbE TSN backbone now with modest-priced FPGAs or an ASIC.

Using 10GbE for system interconnect in an embedded design is no panacea. And employing TSN extensions to meet real-time requirements may preclude using exactly the same Layer-2 solutions that data centers use. But for embedded designs such as autonomous vehicles or vision-based machine controllers that must support high internal data rates, 10GbE as point-to-point links or as backbone interconnect may be an important alternative.

By Altera Training
